(My own questions are in boldface. In chatGPT’s replies I italicize the places where two kinds of things inevitably begin to happen: (1) rote repetition of what it has already said and (2) self-contradiction. Eventually it becomes circular and uninformative. I’m not saying that humans don’t do this too! But it’s all that chatGPT can do.)

Who invented the symbol grounding problem?

The concept of the symbol grounding problem was first introduced by philosopher and cognitive scientist Jerry Fodor in the late 1980s.

In what publication did Fodor introduce the symbol grounding problem?

Jerry Fodor introduced the symbol grounding problem in his 1987 book “Modularity of Mind.”

Where in his 1987 book “Modularity of Mind” did Jerry Fodor introduce the symbol grounding problem?

Jerry Fodor introduced the symbol grounding problem in the first few chapters of his 1987 book “Modularity of Mind.” He discussed it as one of the central challenges in the field of cognitive science and artificial intelligence, and as a key issue that needs to be addressed in order to build truly intelligent machines.

Quote the passage in which Jerry Fodor introduced the symbol grounding problem in his 1987 book “Modularity of Mind.”

I’m sorry, I don’t have the full text of Jerry Fodor’s 1987 book “Modularity of Mind” available to me, so I can’t quote the exact passage in which he introduced the symbol grounding problem. However, the idea can be generally described as the problem of explaining how symbols (such as words or concepts) acquire meaning in the first place, and how they are anchored to sensory experiences and the physical world.

What does “anchored to sensory experiences and the physical world” mean?

“Anchored to sensory experiences and the physical world” refers to the idea that symbols (such as words or concepts) must have a direct connection to our sensory experiences and physical interactions in the world in order to have meaning. The symbol grounding problem asks how symbols can be connected to these experiences and interactions in a way that enables us to understand and use them effectively. In other words, how can symbols be “grounded” in our sensory experiences and physical reality, so that they have a clear and well-defined meaning that can be used in a meaningful way?

Can you give an example of a grounded symbol and how it is grounded?

Certainly! A classic example of a grounded symbol is the word “apple.” The word “apple” is grounded in our sensory experiences with the physical object that we call “apple.” When we see, touch, taste, or smell an apple, our sensory experiences provide a direct connection between the symbol “apple” and the physical object that it refers to. This connection is what gives the symbol “apple” its meaning. In other words, the symbol “apple” is grounded in our sensory experiences with the physical world.

So, when we use the word “apple,” we have a shared understanding of what it means because we have all had similar experiences with the physical object that the word refers to. This grounding in sensory experiences and physical interactions provides the basis for our ability to understand and use the symbol “apple” in a meaningful way.

Does the sensory experience have to be felt, or is it enough to just interact with the apple?

The sensory experience of an apple doesn’t have to be felt in the sense that one has to physically touch or taste it, although these are certainly sensory experiences that can contribute to grounding the symbol “apple.” The idea is that symbols are grounded in our sensory experiences with the physical world, and that these experiences can take many different forms. For example, seeing an apple, smelling an apple, hearing the sound it makes when bitten, etc. The sensory experiences that contribute to grounding a symbol can be direct or indirect, and they don’t all have to come from the same modality (e.g., vision, touch, hearing, etc.). The important thing is that they provide a direct connection between the symbol and the physical world, and that they enable us to understand and use the symbol in a meaningful way.

Can the symbols used by an insentient robot be grounded by its interactions with apples, even though the robot can only act but cannot feel?

In theory, yes, symbols used by an insentient robot could be grounded by its interactions with apples, even though the robot can only act and cannot feel. However, the nature of the grounding would be quite different from the grounding of symbols in human experience.

In human experience, symbols are grounded in our sensory experiences, emotions, and conceptual knowledge, which allow us to understand and use them in a flexible and context-sensitive way. For a robot, symbols would be grounded in its sensors and actuators, which enable it to interact with the physical world in a pre-programmed way. This would allow the robot to use symbols to perform specific tasks and make decisions based on its interactions with the physical world, but it would not allow the robot to understand symbols in the same way that humans do.

In essence, the grounding of symbols in human experience is much more rich and complex than the grounding of symbols in robot experience, because it involves the integration of many different forms of sensory and conceptual information, whereas the grounding of symbols in robots is limited to the information available to their sensors and actuators.

What is a robot?

A robot is a machine designed to perform tasks automatically. It can be controlled by a computer program, by a human operator, or a combination of both. Robots can be used for a variety of purposes, such as manufacturing, exploration, cleaning, and entertainment. They can be built in different shapes and sizes, and can have various sensors, actuators, and other components that enable them to perform specific functions. The field of robotics is concerned with the design, construction, operation, and use of robots.

What is the Turing Test?

The Turing Test is a measure of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. The test was proposed by the British mathematician and computer scientist Alan Turing in 1950.

The basic idea behind the Turing Test is that a human evaluator engages in a natural language conversation with both a human and a machine, without knowing which is which. If the evaluator is unable to consistently distinguish the machine from the human, based solely on their responses in the conversation, then the machine is said to have passed the Turing Test and demonstrated human-level intelligence.

While the Turing Test is widely regarded as an important concept in the field of artificial intelligence and cognitive computing, it is also a subject of much debate and has been criticized on various grounds. Nevertheless, it remains an important benchmark for evaluating the progress of AI and its ability to generate human-like responses.

What is the difference between a chatbot, like chatGPT, which only has contact with words, and a chatbot that is also a robot, and can interact with the referents of words?

The main difference between a chatbot like ChatGPT, which only has contact with words, and a chatbot that is also a robot, and can interact with the referents of words, is the scope of their abilities and the nature of their interaction with the world.

ChatGPT is a language model that has been trained on a vast amount of text data, and it can generate text-based responses to questions and prompts. It can have conversations with users through text-based interfaces, like a chat interface or a command-line interface. However, ChatGPT does not have direct access to the physical world and does not have the ability to interact with the referents of the words it uses in its conversations.

On the other hand, a chatbot that is also a robot can interact with the physical world and the referents of the words it uses in its conversations. For example, a robot chatbot that is designed to interact with objects in a home environment could be programmed to understand and respond to commands like “turn on the light” or “get me a glass of water.” By having access to and the ability to interact with the physical world, the robot chatbot would be able to perform actions and produce responses that are grounded in its interactions with the physical world.

In essence, while both types of chatbots can have conversations and generate text-based responses, a chatbot that is also a robot has the added ability to interact with the physical world and to perform actions that are based on its understanding of the words and commands it receives.

REPEAT: What is the difference between a chatbot, like chatGPT, which only has contact with words, and a chatbot that is also a robot that can learn, and can interact with the referents of words?

The main difference between a chatbot like ChatGPT, which only has contact with words, and a chatbot that is also a robot that can learn and interact with the referents of words is the scope of their abilities and the nature of their interaction with the world.

ChatGPT is a language model that has been trained on a vast amount of text data, and it can generate text-based responses to questions and prompts. It can have conversations with users through text-based interfaces, like a chat interface or a command-line interface. However, ChatGPT does not have direct access to the physical world and does not have the ability to interact with the referents of the words it uses in its conversations.

On the other hand, a chatbot that is also a robot that can learn has the ability to learn from its interactions with the physical world and to adapt its behavior accordingly. For example, a robot chatbot that is designed to interact with objects in a home environment could be programmed to understand and respond to commands like “turn on the light” or “get me a glass of water.” Over time, the robot chatbot could learn from its experiences and make improvements to its behavior, such as being more efficient in finding and retrieving objects.

In essence, while both types of chatbots can have conversations and generate text-based responses, a chatbot that is also a robot that can learn has the added ability to interact with the physical world, to learn from its experiences, and to adapt its behavior based on its interactions with the world. This would allow it to perform actions and produce responses that are grounded in its interactions with the physical world, and to continually improve its ability to interact with the world over time.

What about a chatbot, like chatGPT, that can pass the Turing Test and is also a robot that can learn as well as interact with the referents of words?

A chatbot like ChatGPT that can pass the Turing Test and is also a robot that can learn as well as interact with the referents of words would represent a combination of advanced language processing capabilities and advanced robotics capabilities.

Such a chatbot would have the ability to generate human-like responses to questions and prompts, as demonstrated by its ability to pass the Turing Test. Additionally, it would have the ability to interact with the physical world and to learn from its experiences, much like a traditional robot. This would allow the chatbot to not only generate text-based responses that are grounded in its interactions with the physical world, but also to continually improve its ability to interact with the world over time.

This type of chatbot would represent a highly advanced artificial intelligence system that would combine the best of both language processing and robotics technologies. It would be capable of engaging in human-like conversations and interactions while also having the ability to physically interact with the world and to learn from its experiences, making it a highly versatile and adaptive AI system.

Would the words of a chatbot that could pass the Turing Test and was also a robot that could learn and interact with the referents of words be grounded?

Yes, the words of a chatbot that could pass the Turing Test and was also a robot that could learn and interact with the referents of words would likely be considered grounded.

In the context of the symbol grounding problem, grounded symbols are those that are linked to sensory experiences and the physical world. A chatbot that could pass the Turing Test, was also a robot, and could learn and interact with the referents of words would be capable of generating text-based responses that are grounded in its interactions with the physical world. For example, if the chatbot was programmed to interact with objects in a home environment, it could generate responses such as “I turned on the light” or “I retrieved a glass of water,” which are based on its physical interactions with the world.

In this way, the words of a chatbot that could pass the Turing Test and was also a robot that could learn and interact with the referents of words would be considered grounded because they are linked to its sensory experiences and interactions with the physical world.

What if this chatbot/robot left the kitchen and went out into the world, and kept passing the Turing Test?

If a chatbot/robot that could pass the Turing Test and was capable of learning and interacting with the referents of words were to leave the kitchen and go out into the world, it would likely continue to generate grounded text-based responses based on its interactions with the physical world.

As the chatbot/robot explored its environment, it would be able to interact with new objects, learn from its experiences, and generate new grounded text-based responses. For example, if the chatbot/robot encountered a new object, such as a tree, it might generate a response such as “I see a tree,” which is grounded in its sensory experience of seeing the tree.

In this way, even if the chatbot/robot left the kitchen and went out into the world, it would continue to generate grounded text-based responses based on its experiences and interactions with the physical world. This would allow it to remain a highly versatile and adaptive AI system, capable of engaging in human-like conversations and interactions while also having the ability to physically interact with the world and learn from its experiences.

What do you mean when you say this chatbot/robot was “grounded”?

When I say that the chatbot/robot was “grounded,” I mean that its symbols, or words, were linked to sensory experiences and the physical world.

The concept of grounding symbols in sensory experiences and the physical world is central to the symbol grounding problem, which is the question of how symbols used in thought and language can be linked to the world. A grounded symbol is one that is directly linked to sensory experiences and physical objects, such that its meaning is derived from its connections to these experiences and objects.

In the case of the chatbot/robot, its words were grounded because they were based on its interactions with the physical world. For example, its words such as “I see a tree,” were grounded in its sensory experience of seeing the tree. In this way, the chatbot/robot was grounded because its words were linked to its experiences and interactions with the physical world.

What do you mean when you say this chatbot/robot was “programmed”?

When I say that the chatbot/robot was “programmed,” I mean that it was designed and built to operate in a specific way by a set of instructions or algorithms. The chatbot/robot was created with a specific set of functions and abilities, such as the ability to interact with the physical world and learn from its experiences.

Programming refers to the process of writing code or software that specifies the behavior of a computer or robot. The code or software defines the inputs and outputs of the system, as well as the processes that the system will carry out to achieve its goals.

In the case of the chatbot/robot, its behavior was determined by the code or software that was written to control its operation. The programming defined its abilities and limitations, as well as the way that it would interact with the physical world.

Therefore, when I say that the chatbot/robot was programmed, I mean that it was created and built with a specific set of functions and abilities, determined by the code or software that was written to control its operation.

Wouldn’t a chatbot/robot in the world that could learn be changing as a result of what it learned?

Yes, a chatbot/robot that has the ability to learn would change as a result of what it learned.

In machine learning, learning is the process by which a system improves its performance by processing data and adjusting its algorithms based on that data. In the case of a chatbot/robot, learning would involve adjusting its algorithms based on its interactions with the world, so that it could perform better in the future.

This means that as a chatbot/robot interacts with the world and experiences new things, it would update its algorithms and change its behavior accordingly. Over time, as it continues to learn and interact with the world, it would become more sophisticated and better able to perform its tasks.

Therefore, a chatbot/robot that could learn would change as a result of its experiences and interactions with the world, as it updates its algorithms and improves its performance.

So if the chatbot/robot could learn and ground its symbols in the world outside the kitchen, when would its Turing Test end? How long, and how much, must it do to pass?

The Turing Test, as proposed by Alan Turing, is a measure of a machine’s ability to exhibit intelligent behavior that is indistinguishable from that of a human. The test involves a human judge who engages in a natural language conversation with both a human and a machine, and decides which of the two they believe to be the human. If the judge is unable to reliably distinguish between the machine and the human, then the machine is said to have passed the Turing Test.

In terms of how long and much the chatbot/robot must do to pass the Turing Test, there is no specific time frame or set of criteria that must be met. The Turing Test is subjective and depends on the judgment of the human judge. Some researchers and practitioners in the field of artificial intelligence might consider a chatbot/robot to have passed the Turing Test if it is able to consistently fool human judges in a substantial number of trials. Others might have different criteria for determining whether a chatbot/robot has passed the Turing Test.

Therefore, it is not possible to determine a specific time frame or set of criteria for when the Turing Test would end for a chatbot/robot. The test is ongoing, and the chatbot/robot would continue to be evaluated against human behavior for as long as it is in use. Whether it would pass the Turing Test would depend on the subjective judgment of human judges, and could change over time as the chatbot/robot continues to learn and interact with the world.

If there is no time-limit on the Turing Test (henceforth “TT”) for the learning chatbot/robot out in the world, why would there be a limit on the number of judges? And if any and every judge, regardless of how long the test or the number of judges, is unable to distinguish the performance of the chatbot/robot from that of any other human being, in what sense is the judgment “subjective”, and in what sense are the judges being “fooled”?

The number of judges used in the Turing Test is not a limiting factor in the sense that there is a specific number that must be reached in order for the chatbot/robot to pass the test. The use of multiple judges is typically seen as a way to increase the reliability of the test results by taking into account the subjective judgment of multiple individuals. If a chatbot/robot consistently fools a large number of human judges, it becomes more likely that the chatbot/robot is exhibiting human-like intelligence.

However, the judgment of the human judges is still subjective in the sense that each judge brings their own biases, opinions, and interpretations to the conversation with the chatbot/robot. They may also have different criteria for determining what constitutes human-like behavior. As a result, even if a chatbot/robot consistently fools a large number of human judges, there may still be some degree of disagreement about whether it has truly passed the Turing Test.

Regarding the concept of “fooling” the judges, if a chatbot/robot is able to consistently mimic human behavior in a way that is indistinguishable from that of a real human, then the human judges are being “fooled” in the sense that they are unable to reliably distinguish the machine’s behavior from that of a human. However, it is important to note that the chatbot/robot is not intentionally trying to deceive the human judges, but is instead simply exhibiting the intelligence that it has been programmed or learned to exhibit.

If no one can ever tell any difference between what the grounded chatbot/robot in the world can learn and do and what a human can learn and do, in what sense is that a subjective judgment and in what sense is everyone being fooled?

The judgment of whether a chatbot/robot has truly passed the Turing Test is subjective in the sense that it is based on the opinions and perceptions of the human judges. Each judge may have different criteria for what constitutes human-like intelligence, and their interpretations of the chatbot/robot’s behavior may be influenced by their individual biases and experiences.

If a chatbot/robot is able to consistently mimic human behavior in a way that is indistinguishable from a real human, it is said to have “fooled” the human judges in the sense that the judges are unable to reliably determine that they are interacting with a machine. However, this “fooling” is not a result of intentional deception on the part of the chatbot/robot, but rather a reflection of its advanced abilities and programming.

In cases where multiple human judges are unable to distinguish the chatbot/robot’s behavior from that of a human, the conclusion that the chatbot/robot has passed the Turing Test becomes more reliable. However, even in these cases, the judgment remains subjective as there may still be individual differences in opinions and criteria for what constitutes human-like intelligence.

I said that no one could tell the difference between the performance capacities of the learning, grounded chatbot/robot in the world and those of a human, and you said there was no time limit on the TT, so in what way are these judgments “subjective”? I understand that the chatbot/robot is not trying to fool anyone. So in what sense are they being “fooled”?

If a chatbot/robot is able to consistently mimic human behavior in a way that is indistinguishable from a real human, it is said to have “fooled” the human judges in the sense that the judges are unable to reliably determine that they are interacting with a machine, even though the chatbot/robot is not attempting to deceive them.

However, even if no one can tell the difference between the performance capacities of the chatbot/robot and a human, the judgment of whether the chatbot/robot has truly passed the Turing Test is still subjective because it depends on the criteria and standards used by the human judges to determine whether a machine’s behavior is human-like or not.

For example, some judges may consider a chatbot/robot’s ability to perform certain tasks as evidence of human-like intelligence, while others may place more importance on its ability to understand and respond to complex emotions and social cues. There may also be individual differences in the judgments made by each judge, based on their individual experiences and biases.

So even if no one is able to distinguish the performance capacities of the chatbot/robot from a human, the judgment of whether the chatbot/robot has truly passed the Turing Test remains subjective as it is based on the perceptions and opinions of the human judges.

If no one can tell the difference between X and Y, how is that subjective, and how are they being fooled?

The judgment of whether someone can tell the difference between two things is a subjective matter as it depends on the individual’s perception and evaluation. The idea of being “fooled” implies deception or intentional misinformation, which may not necessarily be the case in this scenario. If a person cannot distinguish between two entities, it may simply be a result of their limited ability to observe or process information, and not because of any intention to deceive.

I agree that Turing-Testing depends on perception — the perceptual capacity of humans. Maybe a metal-detector could distinguish robots from people. But that’s no longer just a matter of performance capacity.

Yes, that’s correct. The Turing Test as originally proposed by Turing is based on human perception and evaluation, and the capacity of a machine to imitate human-like behavior in text-based communication. However, other methods and techniques beyond just human perception can be used to distinguish between entities, such as in your example of a metal detector. These additional methods may be necessary to supplement the Turing Test in certain cases.

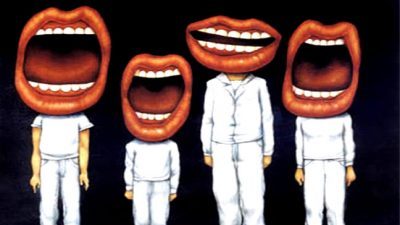

Is Turing-Testing a learning, grounded chatbot/robot still a matter of testing “text-based communication”? Is the TT meant to test for the presence of deception or the presence of human cognitive capacities? And if the TT has no limit on the number of tests and testers, and the chatbot/robot never fails, what is the “deception”?

The Turing Test is primarily a test of text-based communication, which is meant to evaluate a machine’s ability to exhibit human-like behaviors and mimic human intelligence in natural language conversations. The idea behind the test is to see if a human judge can distinguish between a human and a machine based on their responses to questions and prompts in a text-based communication. The test is designed to check for the presence of human-like intelligence and not necessarily the presence of deception. If a machine consistently passes the Turing Test, it could be considered a success in terms of its ability to exhibit human-like intelligence, but it does not necessarily mean that it is not deceiving. The concept of deception in the Turing Test is a subjective matter and can depend on how one interprets the idea of a machine being able to mimic human behavior and thought processes.

Is the TT a test of mimicry or a test of capacity? Is equivalent and indistinguishable capacity mimicry?

The Turing Test is primarily a test of capacity, but it can be seen as a test of mimicry as well. Equivalent capacity means that the chatbot/robot and the human being tested have the same ability to respond to stimuli and perform tasks, which can result in indistinguishable responses. In this sense, the TT can be seen as a test of mimicry. However, the main objective of the TT is to assess a machine’s capacity to demonstrate human-like intelligence, so it is primarily a test of capacity.

But if the capacity of the chatbot/robot (let’s call it the “candidate” from now on) is indistinguishable from that of any human, and the designers know how they produced this capacity, in what sense is it mimicry rather than capacity?

The Turing Test is often described as a test of mimicry, where the candidate chatbot/robot is expected to imitate human-like responses in text-based communication. However, the goal of the test is to determine if the candidate has the capacity to exhibit human-like intelligence in its responses. If the candidate is able to produce indistinguishable responses from a human and the designers understand how they have achieved this capacity, it could be argued that the candidate’s performance is not necessarily mimicry, but rather a demonstration of capacity. Whether the candidate’s performance is considered mimicry or capacity would depend on the interpretation and understanding of those who observe and evaluate it.

But in the example as we discussed it here, the only judgment being made is that no one can ever tell apart the candidate’s performance from any other human’s performance. So I ask again: where is the subjectivity or the mimicry or the “fooling” in any of that?

In the case of the Turing Test, the judgment of whether the candidate’s performance is indistinguishable from that of a human is subjective in the sense that it is based on the subjective perceptions and opinions of the judges. However, this does not mean that the candidate is necessarily “mimicking” human behavior or trying to “fool” the judges. The goal of the Turing Test is to determine if the candidate has reached a certain level of human-like intelligence, not necessarily to determine if it is human or not. The subjective nature of the test lies in the fact that the judges are evaluating the candidate based on their own experiences, biases, and expectations, which can vary from person to person. So, the judgment of whether the candidate has passed the test is subjective to some extent, but the performance of the candidate itself is not necessarily mimicry or deception.

Everyone has “experiences, biases, and expectations,” but the premise here is that no human can tell apart the candidate’s performance from the performance of any other human, ever. (And remember that the candidate is a robot, not just a chatbot, and that the performance is anything humans can do in the world.) So I ask again, isn’t this grounded robotic TT a test of capacity rather than an exercise in mimicry, constrained by the “subjectivity” of the judges (i.e., everyone)? If no human can distinguish the taste of vegan cheese from dairy cheese does that not teach us something about the nature of the taste (to a human) of cheese rather than a test of whether a human is being fooled by mimicry? Is the TT not trying to reverse-engineer human cognitive capacity (“intelligence”) rather than just trying to fool people?

The Turing Test is primarily meant to test a machine’s ability to demonstrate human-like intelligence through text-based communication. The subjective nature of the test lies in the judgment of the human evaluators, who use their own experiences, biases, and expectations to determine whether the machine’s responses are indistinguishable from those of a human. The test is not meant to test for a machine’s capacity to perform tasks in the world like a human, but rather its ability to mimic human-like conversation. Whether the machine’s responses can be considered mimicry or a demonstration of capacity depends on the underlying design and technology that produced the responses. However, the ultimate goal of the Turing Test is to assess a machine’s intelligence and ability to engage in human-like conversation, not necessarily to determine if the human evaluators are being “fooled” or not.

What is and is not a “machine”?

A “machine” is a physical device designed to perform one or more tasks, usually automated, through the use of mechanics and electronics. This can include traditional mechanical devices, such as a car engine, as well as computerized systems, such as a smartphone. So, anything that performs work using physical components, whether mechanical, electrical, or computational, can be considered a machine.

So are biological organisms machines?

Biological organisms are not traditionally considered machines, as the term is typically used to refer to artificial systems designed and constructed by humans for specific purposes. However, there are some theories and philosophical perspectives that view biological organisms as being akin to machines, such as reductionist biological theories that view living systems as being essentially complex systems of interacting physical and chemical processes. But in general, the definition of “machine” is highly context-dependent and can vary depending on the field and the purpose for which it is being used.