HARNAD: What is the relationship between the symbol grounding problem and the nature and evolution of natural language?

ChatGPT: The Symbol Grounding Problem (SGP) and the nature and evolution of natural language are intimately linked.

Symbol Grounding Problem (SGP): The SGP is a concept in cognitive science that concerns how symbols (or words) get their meanings. In essence, it’s about how words become more than just arbitrary labels and come to actually “mean” something. In other words, how do symbols or words become grounded in perceptual and actionable experience? For example, when we see the word “apple,” we don’t just recognize the letters and the sound. We also have a mental image of an apple, understand its properties, and know what actions can be performed with it. In the context of AI, the SGP becomes a challenge because AI often deals with symbols in a way that is not grounded – i.e., the symbols don’t have any inherent meaning to the AI beyond their programmed associations.

Nature and Evolution of Natural Language: Natural language has evolved in humans as a means of communication and thought. It’s believed to be grounded in our sensory and motor experiences. In other words, we learn the meanings of words and language through our interactions with the world, and this grounding in the world allows us to use language to express abstract thoughts, ask questions, give commands, and more.

In terms of their relationship, the evolution of natural language can be viewed as a solution to the SGP. As language evolved in humans, it became a way for us to ground symbols in our experiences and to communicate these grounded symbols to others. This grounding is not just a feature of individual words, but of the syntactic structures that allow us to combine words to express more complex meanings.

When it comes to artificial intelligence or cognitive science, understanding how language evolved to solve the SGP in humans can provide insights into how to create more sophisticated AI systems that actually understand language. If we can find a way to ground symbols in the experience of an AI (for example, through interaction with the environment or sensory input), then we might make a significant stride towards AI that can truly understand and generate natural language in a human-like way.

However, as of my knowledge cutoff in September 2021, the full solution to the Symbol Grounding Problem in AI remains an open question and a topic of active research.

HARNAD: Do you remember our discussion of T3?

ChatGPT: Yes, our earlier discussion referenced T3 in the context of the Turing Test and the Symbol Grounding Problem.

T3, as described, involves not only linguistic capabilities but also sensorimotor capabilities—essentially, the ability of an entity to interact physically with the world. This could include seeing, touching, manipulating objects, navigating, and more. The idea is that an AI or robot passing the T3 test would demonstrate behavior indistinguishable from a human not only in verbal communication (T2) but also in embodied, physical interactions with the world.

In the context of the Symbol Grounding Problem, T3 might be seen as an approach to grounding symbols in an AI’s experiences. By interacting physically with the world, an AI might be able to build a kind of understanding or “grounding” for the symbols it uses. However, as of my knowledge cutoff in September 2021, developing an AI or robot capable of passing the T3 test remains an active area of research.

HARNAD: What is indirect grounding and how is it related to direct grounding?

ChatGPT: Direct grounding refers to the process by which a symbol or word gains meaning through its direct association with sensory or motor experiences. For example, the concept of an apple might be directly grounded in an entity’s sensorimotor experiences with apples, such as seeing them, touching them, tasting them, etc.

Indirect grounding, on the other hand, refers to the process by which a symbol or word gains meaning not through direct sensory or motor experiences, but through its association with other symbols or words that are themselves directly grounded. For example, the concept of a unicorn might be indirectly grounded in an entity’s experiences with the concepts of a horse and a horn, even if the entity has never directly experienced a unicorn.

In the context of artificial intelligence, direct grounding could involve training an AI on a dataset that includes both language data and corresponding sensory or motor data, allowing the AI to directly associate words with experiences. Indirect grounding, on the other hand, might involve training an AI solely on language data, allowing it to learn the meanings of words through their relationships with other words, without requiring direct sensory or motor experiences.

Both direct and indirect grounding approaches seek to address the symbol grounding problem, which is the question of how symbols (or words) get their meanings. A fully grounded AI system would presumably be capable of both direct and indirect grounding. However, as of my knowledge cutoff in September 2021, creating such a system remains an open challenge.

HARNAD: How is direct grounding related to sensorimotor category learning? It is not an “association” between words and “experiences.” It is an agent’s capacity to learn to do something: What?

ChatGPT: Direct grounding is closely related to sensorimotor category learning. It involves the agent’s ability to interact with its environment, process the sensory information it receives, and develop its own internal representations of the categories of objects and events it encounters.

In other words, direct grounding is about an agent learning to categorize its sensory input (such as visual, auditory, tactile information) into meaningful categories based on its own interactions with the environment. For instance, a child learns what an “apple” is not just by being told the word “apple”, but by interacting with actual apples – seeing them, touching them, tasting them. The word “apple” then becomes directly grounded in the child’s sensorimotor experience with apples.

In the case of an AI, it could learn a sensorimotor category by interacting with a simulated or physical environment. For example, it could learn the category of “circle” by interacting with various objects and identifying the common feature of “roundness”. This sensorimotor category can then be directly grounded by associating it with the corresponding symbol or word in its programming or training data.

So, to sum up, direct grounding in the context of sensorimotor category learning is about an agent’s capacity to learn to recognize and categorize its sensory inputs based on its own motor interactions with the environment. These categories can then be associated with corresponding symbols or words, enabling the agent to understand and use those symbols in a meaningful way.

HARNAD: How does indirect grounding depend on prior direct grounding?

ChatGPT: Indirect grounding, also referred to as symbolic grounding, refers to the ability to understand new concepts or categories by associating them with already established and directly grounded concepts. In other words, it involves defining new symbols (categories) in terms of previously grounded symbols.

Consider the scenario of a child learning language. Initially, the child learns to associate basic words with their real-world referents through direct sensorimotor experience – this is direct grounding. For instance, the child learns the word “apple” by associating it with physical apples they have seen, touched, and tasted.

Once the child has a foundation of directly grounded words, they can start to learn new words and concepts indirectly. They can understand the word “fruit” as a category that includes “apples” and other directly grounded words like “banana” and “grape”. This new category of “fruit” is indirectly grounded through its associations with the directly grounded categories.

Similarly, in AI systems, once a set of basic sensorimotor categories is directly grounded, new categories can be learned by associating them with the already grounded categories. For instance, an AI might learn the concept of a “vehicle” by understanding it as a category that includes directly grounded categories like “car”, “bus”, “bike”, etc.

In this way, indirect grounding leverages prior direct grounding as the basis for learning and understanding new categories. The ability to learn indirectly significantly expands an agent’s capacity to learn new concepts and categories beyond those it has directly experienced.

HARNAD: There are organisms (“agents”) on earth, and many are able to learn to recognize things in the world (e.g., apples) and they can also learn what to do with them (e.g., eat apples). To do this agents have to be able to learn to detect the features of apples that distinguish them from baseballs, which cannot be eaten. The learning produces a feature-detector, and this is what grounds the word in the capacity to recognize and manipulate its referent (e.g., apple).

It is true that in organisms it feels like something to be able to do this, so in that sense “experience” is involved. But since no one yet knows the causal role of feeling in category learning and symbol grounding (this is called the “hard problem” of cognitive science), there is no point talking about the role of felt experience in symbol grounding. So now can you explain symbol grounding to me without any mention of “association” or “experience,” but just in terms of category learning and feature detection?

ChatGPT: Sure, let’s approach the symbol grounding problem purely from a learning and cognitive perspective, focusing on categories and feature detection.

Grounding symbols (such as words) involves connecting them to real-world entities or concepts that they represent. This connection is created through a process of learning and recognition. This learning process is driven by the agent’s ability to distinguish between different categories based on the features of the entities belonging to these categories.

Direct grounding, as it pertains to category learning and feature detection, involves creating a link between a symbol and its referent, where the referent is something the agent can interact with in the real world. For instance, an agent, through its sensorimotor capabilities, can learn to recognize an apple by its features such as its round shape, red color, sweet smell, etc. Once this categorization is achieved, the agent can then associate the symbol (the word “apple”) with this specific category of objects. Here, the symbol “apple” is directly grounded in the agent’s sensorimotor experiences with real-world apples.

Subsequently, the agent can build upon this foundation of directly grounded symbols to create indirectly grounded symbols. These are symbols that are not linked to a specific sensorimotor experience, but rather to other symbols (or combinations of symbols) that have been directly grounded. For example, the symbol “fruit” can be indirectly grounded by linking it with directly grounded symbols like “apple”, “banana”, etc.

Thus, in this context, symbol grounding is not about vague “associations” or subjective “experiences”, but about a concrete process of learning to recognize and categorize entities based on their observable features, and linking symbols to these categories. The learning and grounding processes are facilitated by the agent’s sensorimotor capabilities, allowing it to interact with and distinguish between different entities in the world.

HARNAD: Grounding words is not just learning to recognize and distinguish and name (kinds or categories of) entities (the referents of the words). It’s based on learning to detect and distinguish the features of the members of the referent category from the features of the nonmembers. It’s also based on learning what to DO or NOT-DO with the members and nonmembers (e.g., eat or don’t eat them). “Represent” is a vague weasel-word. What has to be in the head of an agent that has learned a new word’s referent in view of what I’ve just said?

ChatGPT: In the agent’s “head,” or more concretely in its cognitive and neural processing system, learning a new word’s referent would involve establishing a mapping between the sensory and motor features of the referent and the verbal or symbolic representation of the word. This mapping is the grounding of the word.

Detection of Sensory Features: The agent, through its sensorimotor interactions with the world, learns to recognize and discriminate the relevant sensory features of entities, such as their shape, color, smell, texture, etc.

Categorization: These sensory features are used to categorize entities into classes or kinds. For instance, entities with a certain combination of features (round, red, sweet smell) might be categorized as ‘apples.’

Action Responses: Alongside recognizing sensory features and categorization, the agent learns appropriate responses or actions associated with the entities. For instance, apples can be eaten, while baseballs cannot. These action responses are part of the agent’s understanding of the entity.

Symbol Mapping: Once the agent can detect the features, categorize entities, and knows the associated action responses, it can link or map the word (symbol) “apple” to this package of sensory and motor knowledge.

This grounding is not merely an “association” but a complex mapping process that links words with specific patterns of sensory inputs and motor responses. When the word is encountered again, it activates this package of sensory, categorical, and motor knowledge, guiding the agent’s recognition, understanding, and interaction with the referent of the word.

This is how the agent comes to “know” or “understand” the referent of the word. It’s not just about recognizing and naming entities, but about learning to detect and categorize entities based on their sensory features, and determining appropriate responses or actions in relation to these entities. This entire process is facilitated by the agent’s sensorimotor capabilities, allowing it to interact with and distinguish between different entities in the world.
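The account above (learn to detect the features that separate members from nonmembers, and learn what to do with each) can be illustrated with a deliberately tiny learner. The sketch below swaps in a classic perceptron as the learning rule; that choice, the three made-up features (redness, softness, sweet smell) and their values are all assumptions for illustration, not a claim about how organisms or a T3 robot actually learn. What it shows is that after corrective feedback on eat/don't-eat trials, the "feature detector" is nothing more than a set of learned weights that directly drives the action.

```python
# A minimal sketch, assuming a perceptron as the category learner.
# Each object is a hypothetical feature vector (redness, softness, sweet_smell),
# with made-up values in [0, 1]; the label says whether the agent should eat it.

def score(w, b, x):
    """Weighted sum of the features plus a bias term."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights so that score > 0 means 'eat' and score <= 0 means 'don't eat'."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, should_eat in examples:
            did_eat = score(w, b, x) > 0
            # Corrective feedback: +1 if it should have eaten, -1 if it should not have.
            error = int(should_eat) - int(did_eat)
            if error:
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
    return w, b

def eats(w, b, x):
    """The learned feature detector doubles as the action selector."""
    return score(w, b, x) > 0

examples = [
    ((0.9, 0.6, 0.8), True),   # apple
    ((0.8, 0.5, 0.9), True),   # apple
    ((0.1, 0.1, 0.0), False),  # baseball
    ((0.2, 0.0, 0.1), False),  # baseball
]

w, b = train_perceptron(examples)
print(eats(w, b, (0.85, 0.55, 0.85)))  # a new apple-like object → True
print(eats(w, b, (0.15, 0.05, 0.05)))  # a new baseball-like object → False
```

A real category learner would face many more features and far less cleanly separable members, but the structure is the same: feature weights learned from the consequences of doing or not doing something.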

HARNAD: That was a much better summary of what is going on in the head of the human or the T3 that grounds the words it speaks, hears or thinks. But I don’t understand “mapping.”

Suppose the only distinguishing features of apples and oranges were that they are round and that apples are red but oranges are orange, and you eat apples by biting them whereas you eat oranges by peeling them and then biting them. And suppose that’s the only thing the agent does in life: to recognize, name and eat oranges and apples. What does calling any of this “mapping” add to this story, either in the toy world of just apples and oranges, or in the real world, with a T3 that can recognize, name and do the right thing with the referent of every (content) word it knows?

(By the way, what is the difference between content or “open class” words and function or “closed class” words? Only content words have referents. What percentage of the words in a language are content words, and what percentage are function words?)

ChatGPT: “Mapping” in this context refers to the process by which the agent creates and uses an internal representation or model of the relationship between certain sensory and motor patterns and the corresponding symbolic representations (words). In the case of the apple and orange example, the “mapping” would be the internal mechanism by which the agent understands that when it perceives a round and red object that can be bitten directly, it’s dealing with an “apple,” and when it perceives a round and orange object that needs to be peeled before biting, it’s dealing with an “orange.” This mapping allows the agent to navigate its environment and correctly interact with different entities, recognizing them and predicting the appropriate actions to take.

Regarding content words and function words, these are categories used in linguistics to distinguish between types of words based on their purpose in language.

Content words (open class words) are words that carry semantic content and can be categorized as nouns, most verbs, adjectives, and adverbs. They are referred to as an “open class” because new words can be added to these categories as language evolves. They’re called “content” words because they contribute the main content or meaning to a sentence – they refer to things, actions, qualities, etc.

Function words (closed class words) are words that primarily have grammatical functions, such as conjunctions, prepositions, articles, pronouns, auxiliary verbs, etc. They are referred to as a “closed class” because new words are seldom added to these categories. Function words provide the grammatical structure to a sentence, linking content words and helping to express relationships between them.

The exact ratio of content words to function words can vary between languages and types of text, but in English, function words are more frequent in running text. However, in terms of unique words (vocabulary), content words greatly outnumber function words, as the latter are a limited set. Estimates vary, but some studies suggest function words can make up around 50-60% of total words in running text in English, but only a few hundred unique function words exist compared to tens of thousands of content words.
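The token-versus-type distinction in the answer above can be made concrete with a small counter. The function-word list below is a hand-picked, deliberately incomplete assumption (real closed-class inventories run to a few hundred items), and the sample sentence is invented, so the proportions it yields only illustrate the computation; they are not evidence for the 50-60% estimate.

```python
# A minimal sketch; FUNCTION_WORDS is a hypothetical, deliberately incomplete list.
FUNCTION_WORDS = {
    "a", "an", "the", "and", "or", "but", "of", "in", "on", "to", "with",
    "is", "are", "was", "be", "it", "they", "that", "this", "not",
}

def tokenize(text):
    """Lower-case, split on whitespace, and strip surrounding punctuation."""
    tokens = (t.strip('.,;:!?"()').lower() for t in text.split())
    return [t for t in tokens if t]

def function_word_shares(text):
    """Return (share of running tokens, share of distinct types) that are function words."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0, 0.0
    token_share = sum(t in FUNCTION_WORDS for t in tokens) / len(tokens)
    types = set(tokens)
    type_share = len(types & FUNCTION_WORDS) / len(types)
    return token_share, type_share

text = "The apple is on the table and the orange is in the bowl."
token_share, type_share = function_word_shares(text)
print(token_share, type_share)
```

Here 9 of the 13 tokens are function words, but only 5 of the 9 distinct types are. Function words repeat, so their share of running text exceeds their share of the vocabulary; over a large text the type share falls much further, because content words keep adding new types.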

HARNAD: I still don’t understand what “mapping” actually means here. It sounds like in the toy world the agent just needs to learn some features and actions, kind of like a selective filter that learns to detect the feature red or orange: if something is red, eat it, and if it’s orange, peel and eat it. In addition to the feature-detectors and the action-generators there could be a logical circuit: “If R then E, if O then P and E.” Or the input/output pattern could be hard-wired. And much the same would be true if it were all scaled up to T3 (do you remember that?). So the “mapping” is as irrelevant as the “association” and the “experience.”

ChatGPT: You’re right that the process can be described in terms of features, actions, and if-then logical connections or “rules.” The term “mapping” is often used more generally in cognitive science and artificial intelligence to describe the relationship between two sets of things – in this case, sensory-motor patterns and symbolic representations. But if it is causing confusion, it’s certainly possible to describe the process without using that term.

To simplify, in the context of symbol grounding, the agent learns to detect the distinguishing features of different categories of things in the world, such as apples or oranges. It then learns the appropriate actions to perform with members of each category. This learning process might be characterized by a series of if-then rules like the ones you suggested. The word for each category, like “apple” or “orange,” is grounded in the agent’s capacity to recognize members of the category based on their features and to perform the correct actions with them.

The word itself could be thought of as a label for a set of related sensory-motor patterns and the corresponding rules for action. So, in a sense, the word gets its meaning from the agent’s interactions with the world, its ability to recognize and act appropriately with different categories of things.

In this sense, grounding is more than just associating a word with an experience or mapping it onto a sensory pattern. It’s about the agent’s learned capacity to interact with the world in a meaningful way based on its recognition of different categories of things and its knowledge of what to do with them. That’s what gives the words their meaning.

And yes, the same logic extends to T3, a robotic system that’s capable of both linguistic and sensorimotor interaction. It’s about more than just detecting and responding to sensory patterns; it’s about meaningful interaction with the world, which involves recognizing, categorizing, and acting appropriately.
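Harnad's toy world (everything is round; apples are red and are bitten, oranges are orange and are peeled and then bitten) can be sketched as a learned feature detector plus an if-then action table, with no separate "mapping" step. The `Thing` class, the feature names, and the strategy of picking the first single feature that separates the labelled examples are assumptions made for this illustration.

```python
from dataclasses import dataclass

# Hypothetical toy-world objects: only two features, as in the example.
@dataclass(frozen=True)
class Thing:
    is_round: bool
    colour: str  # "red" or "orange"

# If-then action rules: "If R then E; if O then P and E".
ACTIONS = {
    "apple": ["bite"],
    "orange": ["peel", "bite"],
}

def learn_detector(examples, feature_names):
    """Find the first feature whose values separate the labelled categories.

    Returns (feature_name, value -> category table).
    """
    for name in feature_names:
        table = {}
        for thing, category in examples:
            # setdefault records the first category seen for this value;
            # a different category for the same value means no separation.
            if table.setdefault(getattr(thing, name), category) != category:
                break
        else:
            return name, table
    raise ValueError("no single feature separates the categories")

def respond(thing, detector):
    """Detect the category, name it, and return the actions to perform."""
    name, table = detector
    category = table[getattr(thing, name)]
    return category, ACTIONS[category]

examples = [
    (Thing(is_round=True, colour="red"), "apple"),
    (Thing(is_round=True, colour="orange"), "orange"),
]
detector = learn_detector(examples, ["is_round", "colour"])
print(detector)  # → ('colour', {'red': 'apple', 'orange': 'orange'})
print(respond(Thing(is_round=True, colour="orange"), detector))  # → ('orange', ['peel', 'bite'])
```

Roundness is rejected because both categories share it; colour is kept because it separates them. Everything the agent "knows" is in the detector table and the action table; nothing further is stored that could be called a mapping.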

HARNAD: That sounds much better, because simpler, removing the superfluous or empty parts. Now can we get back to how indirect grounding works (again with no superfluous words)?

Indirect grounding is verbal grounding. But all the (content) words in the verbal definition of the new indirectly grounded category (like “zebra”) have to already be grounded, both for the SPEAKER and the HEARER/LEARNER. How does this work?

ChatGPT: Certainly, let’s try to simplify indirect grounding:

Direct grounding, as we’ve established, involves an agent learning to recognize and interact appropriately with the things in the world. This gives meaning to the words that label these categories.

Indirect grounding, on the other hand, is a process that allows an agent to learn the meaning of new words based on their relationships to words that have already been directly grounded. In essence, it is learning by description or explanation, using language itself.

Here’s a simplified version of how it might work:

The agent already has a set of directly grounded words. These words are grounded in the agent’s capacity to interact with the things they refer to. Let’s say these words include “animal,” “stripes,” “black,” and “white.”

Someone tells the agent, “A zebra is an animal with black and white stripes.”

The agent, having directly grounded the words “animal,” “stripes,” “black,” and “white,” can combine this knowledge to form a new category, “zebra,” even if the agent has never encountered a zebra before.

This new word, “zebra,” is indirectly grounded. It is grounded in the agent’s knowledge of the other directly grounded words and the relationships between them, as explained in the definition.

This process relies on the shared understanding between the speaker and the hearer. The speaker, who knows what a “zebra” is, uses words that the hearer already knows (“animal,” “stripes,” “black,” and “white”) to explain this new concept. This shared language and the ability to explain new concepts using known words is a powerful tool for indirect grounding.
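The zebra example can be sketched by treating each directly grounded word as a detector that tests a percept, and building the new word's detector out of the detectors named in its definition. Reading the definition as a plain conjunction, and describing percepts as dictionaries with keys such as `moves_by_itself`, are simplifying assumptions; real definitions have more structure. The check for missing words reflects the requirement that every content word in the definition already be grounded for the hearer.

```python
from typing import Callable, Dict, List, Mapping

# A detector answers "is this percept a member of the category?"
Detector = Callable[[Mapping[str, object]], bool]

# Hypothetical directly grounded detectors (the hearer's existing vocabulary).
lexicon: Dict[str, Detector] = {
    "animal": lambda p: bool(p.get("moves_by_itself", False)),
    "stripes": lambda p: p.get("pattern") == "striped",
    "black": lambda p: "black" in p.get("colours", ()),
    "white": lambda p: "white" in p.get("colours", ()),
}

def ground_by_definition(word: str, definition: List[str],
                         lexicon: Dict[str, Detector]) -> Detector:
    """Indirectly ground `word` as the conjunction of already grounded words."""
    missing = [w for w in definition if w not in lexicon]
    if missing:
        raise KeyError(f"cannot ground {word!r}; ungrounded words: {missing}")
    parts = [lexicon[w] for w in definition]
    detector: Detector = lambda p: all(d(p) for d in parts)
    lexicon[word] = detector
    return detector

# "A zebra is an animal with black and white stripes."
zebra = ground_by_definition("zebra", ["animal", "stripes", "black", "white"], lexicon)

# Percepts of things never encountered before, described by their features.
print(zebra({"moves_by_itself": True, "pattern": "striped",
             "colours": ("black", "white")}))  # → True
print(zebra({"moves_by_itself": True, "pattern": "plain",
             "colours": ("brown",)}))  # → False
```

The new detector contains nothing that was not already in the grounded detectors; the definition only says how to combine them, which is why it fails if any defining word is ungrounded.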

HARNAD: Now how is direct and indirect grounding related to what you, ChatGPT, can and cannot do?

ChatGPT: As a model developed by OpenAI, I, ChatGPT, have no capability for direct grounding. I do not have a body, sensory organs, or the ability to interact with the physical world. Therefore, I cannot learn the meaning of words through interaction with or perception of the physical world.

However, I do have a kind of indirect grounding. I have been trained on a large dataset of text from the internet, which includes many different contexts and uses for words. This training has enabled me to learn to predict what word or phrase is likely to come next in a piece of text, based on the words that have come before it.

This prediction capability allows me to generate responses to user prompts that often make sense and seem to reflect an understanding of the words used. However, this is not the same as true understanding or grounding. Rather, it’s a kind of statistical pattern-matching based on the data I was trained on.

For example, if you ask me about zebras, I can generate a response that includes information about zebras, because that information was present in the data I was trained on. I can “tell” you that a zebra is a kind of animal with black and white stripes, even though I have never seen a zebra or a stripe, because I’ve seen the words “zebra,” “animal,” “black,” and “white” used together in certain ways in the data I was trained on.

In summary, while I can generate responses that often make sense and seem appropriate in context, I don’t truly understand the words I’m using in the way that a human, or a hypothetical AI with direct grounding, would.

HARNAD: That was a good synthesis, but I think there is one very important error in it. You said:

“I, ChatGPT, have no capability for direct grounding. I do not have a body, sensory organs, or the ability to interact with the physical world. Therefore, I cannot learn the meaning of words through interaction with or perception of the physical world. However, I do have a kind of indirect grounding…”

But you don’t have indirect grounding, because, as you noted, indirect grounding depends on verbal definitions or descriptions in which all the (content) words used are already grounded, either directly or indirectly. The symbol grounding problem is based on the fact that this process cannot be “indirect” all the way down; otherwise you are back in the word-word circle that gave rise to the symbol grounding problem in the first place. So your words are not indirectly grounded, because none of them is directly grounded, and so there is no directly grounded base from which all the rest could be indirectly grounded.

(You have no “minimal grounding set” that was grounded directly.) Yet your verbal syntheses are meaningful to human hearers who do have directly grounded words. You said that the reason this is possible is because of the patterns of words in your huge database. I agree. But I may not agree on what the patterns are and how they work: Can you explain them to me?
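The point that grounding cannot be "indirect all the way down" can be checked on a miniature dictionary. The sketch below assumes that a word becomes grounded as soon as every content word in its definition is grounded, and repeats that step until nothing changes. The eleven made-up definitions are deliberately circular, as in a real dictionary every word is defined by other words. Starting from no directly grounded words, nothing gets grounded; starting from two words that break the two definitional circles, everything does.

```python
# A minimal sketch; DEFINITIONS is a hypothetical, deliberately circular mini-dictionary
# mapping each word to the content words used in its definition.
DEFINITIONS = {
    "zebra":   {"horse", "stripes"},
    "horse":   {"animal", "mane"},
    "stripes": {"dark", "light"},
    "mane":    {"hair", "neck"},
    "animal":  {"living", "moves"},
    "hair":    {"living"},
    "neck":    {"animal"},
    "dark":    {"light"},
    "light":   {"dark"},
    "living":  {"moves"},
    "moves":   {"living"},
}

def grounded_words(definitions, directly_grounded):
    """Return every word reachable by indirect grounding from `directly_grounded`.

    A word is added once all the words in its definition are grounded; the loop
    repeats until a full pass adds nothing new (a fixed point).
    """
    grounded = set(directly_grounded)
    changed = True
    while changed:
        changed = False
        for word, defining_words in definitions.items():
            if word not in grounded and defining_words <= grounded:
                grounded.add(word)
                changed = True
    return grounded

print(sorted(grounded_words(DEFINITIONS, set())))               # → []
print(sorted(grounded_words(DEFINITIONS, {"dark"})))            # → ['dark', 'light', 'stripes']
print(len(grounded_words(DEFINITIONS, {"dark", "living"})))     # → 11
```

Any directly grounded set that breaks every definitional circle would work here; finding the smallest such set for a real dictionary is the "minimal grounding set" question, which this sketch does not attempt.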

ChatGPT: I apologize for any confusion, and thank you for the clarification on the concept of indirect grounding. As a language model, I do not have any grounded words, either directly or indirectly grounded, because I don’t have sensory or motor experiences.

The patterns in my large dataset that enable me to generate responses to user prompts are statistical in nature. I’ve been trained to predict the next word in a sentence given all the previous words, based on patterns seen in the training data. This next-word prediction objective, applied to a neural network with what is called a transformer architecture, is what trained me to generate human-like text.

For example, if in the training data the word ‘apple’ frequently follows the words ‘red’, ‘fruit’, ‘orchard’, I’ve learned to predict that ‘apple’ is a likely next word after these. I don’t “understand” these words or their connections in the way a human does, which is through direct or indirect grounding in sensory and motor experiences. Rather, I generate text based on the patterns of word usage in the data I was trained on.

To elaborate, during training the model is repeatedly shown text, and its parameters are adjusted so that it assigns higher probability to the word that actually comes next. Through this, the model learns associations between words and context, enabling it to generate coherent and contextually appropriate responses. However, this process is entirely based on recognizing statistical patterns in data and does not involve any genuine understanding or grounded meaning.

So, when you interact with me, I generate responses based on predicting what a human would likely say next in a similar context, based on the patterns I’ve seen in the data. But this doesn’t mean that I truly understand the meanings of the words I’m using. I hope this clarifies things a bit more.
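The idea of predicting the next word from patterns of word usage can be illustrated with the simplest possible statistical predictor, a bigram counter. This is a swapped-in toy, not how GPT works: GPT models use a transformer network conditioned on a long window of preceding context, not counts of adjacent word pairs. The three-sentence corpus is invented. Note that nothing in the resulting table connects the string "apple" to apples.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent successor of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training corpus.
corpus = [
    "a red apple fell from the tree",
    "she ate a red apple",
    "a red car drove past",
]
model = train_bigrams(corpus)
print(predict_next(model, "red"))    # → apple
print(predict_next(model, "zebra"))  # → None
```

"apple" is predicted after "red" only because it followed "red" twice and "car" once; the predictor has word-word statistics and nothing else.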

HARNAD: Yes, and of course you are right about how this works, based on statistical analysis of your huge database. But I have a hunch that there is more to it than that, and it has to do with the difference between a word and a sentence (which is also the difference between a referent category name and a PROPOSITION, which is a subject/predicate series of category names in the form of an assertion about what is the case; an assertion which may be TRUE or FALSE).

Single words do not have truth values. “Apple” is neither true nor false. It is simply an arbitrary symbol that speakers have agreed to use to refer to apples. (Only the deictic proposition “THIS is an apple” can be true or false.) The “shape” of “apple” is arbitrary in the sense that it does not resemble apples in any way. So it gives no clue to what its referent is “shaped” like. Nor is it causally connected with apples in any way (except in the head of a speaker or hearer that has grounded the word by learning to recognize and interact with its referent, using detectors of the “shapes” of the sensorimotor features of apples, as we’ve discussed).

Now the question to ask yourself is: Is the arbitrariness of the shape of “apple” in relation to the shape of apples also true of PROPOSITIONS — which are composed of subject/predicate definitions or descriptions of things — and their relation to the “shape” of the states of affairs they assert to be true?
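The contrast between a word and a proposition can be sketched in code: a word on its own is just a key, with no truth value, whereas a proposition applies grounded category detectors to something and comes out true or false. The `Thing` features, the crude "round and red" apple detector, and the idea of checking a general subject/predicate assertion over a small finite world are all assumptions made for illustration only.

```python
from dataclasses import dataclass

# Hypothetical objects described by two sensorimotor features.
@dataclass(frozen=True)
class Thing:
    colour: str
    shape: str

# Hypothetical grounded detectors for a few content words.
DETECTORS = {
    "apple": lambda t: t.shape == "round" and t.colour == "red",
    "round": lambda t: t.shape == "round",
    "red": lambda t: t.colour == "red",
}

def this_is_a(thing, word):
    """Deictic proposition 'THIS is a <word>': true or false of a given thing."""
    return DETECTORS[word](thing)

def every_is(world, subject, predicate):
    """Subject/predicate proposition 'every <subject> is <predicate>' over a finite world."""
    return all(DETECTORS[predicate](t) for t in world if DETECTORS[subject](t))

apple = Thing(colour="red", shape="round")
baseball = Thing(colour="white", shape="round")
world = [apple, baseball]

print(this_is_a(apple, "apple"))           # → True
print(this_is_a(baseball, "apple"))        # → False
print(every_is(world, "apple", "round"))   # → True
print(every_is(world, "round", "red"))     # → False
```

The string "apple" by itself is neither true nor false; only the propositions built from it, applied to things in the world, yield a truth value.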

ChatGPT: The arbitrariness of the word “apple” in relation to the physical object it represents is a fundamental aspect of symbolic language. The word “apple” doesn’t physically resemble an apple in any way, as you’ve pointed out. This is true for all individual words (or symbols) in a language, which is known as the arbitrariness of the sign.

However, when we combine words into sentences or propositions, things become a little more nuanced. The structure and syntax of a sentence isn’t entirely arbitrary because it reflects the logical and grammatical relationships between the entities it represents. In English, for example, a simple sentence generally follows the subject-verb-object structure. This structure is not randomly chosen; it reflects a logical progression of ideas and helps to convey meaning clearly.

Still, it’s important to recognize that while the structure of a sentence can hint at the relationships between the things it represents, it still doesn’t inherently resemble those things or their relationships in the physical world. This is closely related to the “symbol grounding problem.” We humans “solve” this problem by learning to associate words and sentences with our experiences in the world, but as an AI, I can’t do this.

The words and sentences I generate don’t reflect a grounded understanding of the world. Instead, they are based on statistical patterns in the text data I was trained on. When I generate a sentence, I’m not generating a representation of the world as I understand it (because I don’t have a grounded understanding of the world). Instead, I’m predicting what words and structures are statistically likely to come next in a given context, based on the patterns I’ve learned from my training data.

So in summary, while the structure of a sentence or proposition isn’t as arbitrary as an individual word because it reflects logical and grammatical relationships, it is still ultimately disconnected from the physical world it describes, at least in the context of an AI language model like me.

HARNAD: Yes, I agree that part of the coherence, comprehensibility and informativeness of the summaries and interactions with ChatGPT (henceforth “GPT”) for users is because of the statistical patterns in spoken and written strings of words and the algorithms that GPT applies to them. But I think there may be something more to it. Consider that if the word “apple” were really iconic, i.e., if it really resembled an apple, then non-speakers of English would still be able to learn more from an iconic English dictionary than they can learn from a normal dictionary of the arbitrary words of English. (But this would only help for a relatively small number of visually recognizable objects and features.)

There are good reasons why words are not iconic, like hieroglyphics, and why iconicity would be a handicap: For one thing, language was spoken before it was written, and it would be hopeless to try to produce an acoustic icon of the referent of every possible word. (It’s already nearly as impossible with visual or gestural icons.) But there are languages (e.g., Chinese) in which the “iconicity” is of a higher order, in the basic characters (originally iconic) out of which all words are composed. I wonder whether GPT is even more effective in languages with that extra layer of correlation. I understand that results with coupling GPT with labelled image databases have been mixed.

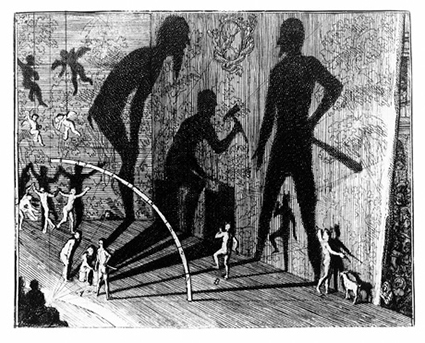

But what I want to add is that at the level of the formal structure of definitions and descriptions there may be a form of iconicity of form which is like the Platonic shadows of the meanings of propositions – faint, but present, and not just “statistical” but structural in some way.

ChatGPT: You bring up a number of interesting points. Let’s unpack them one by one.

Iconicity in Language: Yes, the majority of words in spoken languages do not physically resemble what they represent. This is the “arbitrary nature” of the linguistic sign as postulated by Ferdinand de Saussure, a linguist who laid the foundation for many of the assumptions of modern linguistics. While iconicity does exist in some forms of language, such as onomatopoeic words (like “buzz” or “bang”) or ideophones, it is not the norm. As for Chinese characters, they originally had a more pictographic nature, but have since evolved into more abstract symbols, with some retaining a semblance of their original iconic form.

Language and AI: When you mention the effectiveness of AI language models in languages with an extra layer of correlation, are you suggesting that these models might perform better in languages where there’s some level of iconicity? It’s an intriguing idea, although it would depend on how much that iconicity is preserved in modern usage. More research would be needed to see if this is the case.

Iconicity in Propositions: Here you’re suggesting that the structure of definitions and descriptions could have some form of iconicity, or resemble the concepts they describe. This is a fascinating concept. A sentence or proposition in language does have a structure that reflects some form of the logical or temporal order of the concepts it describes. In that sense, one could argue there’s a certain degree of “iconicity” in the formal structure of language. However, this is very different from the kind of iconicity where the sign physically resembles its referent. The kind of iconicity you’re suggesting at the level of sentences or propositions seems to be more abstract and related to the logical or conceptual relationships between the ideas being expressed.

As for how this applies to AI like me, I am designed to recognize and generate language patterns, which includes the logical and temporal relationships between words and phrases. This allows me to generate text that makes sense in the context of the conversation or topic at hand. However, I don’t “understand” these relationships in the same way humans do because my “understanding” is not based on any grounded experience or perception of the world.

HARNAD: I’m pretty sure that there is formal/logical iconicity to be gleaned from large bodies of text and discourse out of the function words, which do not have referents, only syntactic rules. But I have a hunch that there might be even more (of this “iconicity” or “latent structure”) in content words, preserving a shadow of the shape or structure of states of affairs (expressible as propositions). This would not “resemble” the shape of a referent category the way the feature-detectors in direct sensorimotor grounding do, but it might “resemble” the shape of verbal definitions and descriptions in indirect grounding. It would itself be ungrounded, lacking the direct grounding completely. But ungrounded descriptions may still retain SOME of the formal “shadows” of meaning. This is still too vague, however, and needs more thought and analysis.

ChatGPT: The idea of formal/logical iconicity is interesting. If I understand correctly, you’re suggesting that the structure of language, the way sentences are constructed and words are arranged, might inherently carry some degree of “meaning” – or at least, an echo or shadow of the meaning that they convey when grounded by a human mind.

This could be a potential direction for further research. It’s true that the structure and rules of language play a significant role in our understanding and interpretation of sentences. And perhaps it’s possible that AI, even without direct or indirect grounding, might be able to discern and leverage these structures in some way that goes beyond simple statistical patterns.

If this hypothesis were correct, it could have implications for the development of AI and natural language processing. It would suggest that there is a level of ‘understanding’ that could be achieved even without direct grounding, albeit a limited one.

It’s also worth noting, however, that this would still be a form of ‘understanding’ that’s quite different from human understanding, since it would lack the personal, subjective experience that we associate with our own understanding of the world.

HARNAD: On the subject of the platonic shadows of “meaning” that might be derivable from large bodies of continuous text:

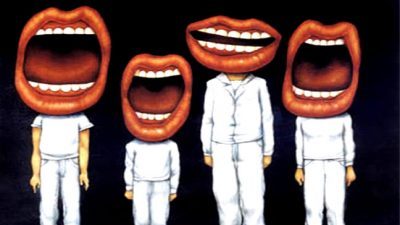

Are you familiar with Noam Chomsky’s suggestion that the constraints of Universal Grammar (UG) may not be grammatical constraints at all, but constraints on (verbal) thinking itself: that the syntactic structures that violate UG may sound wrong to us not because they are ungrammatical but because they are unthinkable? that a UG violation such as “John is easy to please Mary” or “Who did he think that went out?” might correspond to expressing “unthinkable thoughts”? so that they in fact express no thought at all, though they may be close to thinkable thoughts (“It is easy for John to please Mary” or “Who did he think it was that went out?”)?

Could it be because sentences can only express thinkable thoughts that there is some (perhaps small) degree of meaning in strings of ungrounded words spoken by grounded thinkers?

Chomsky has explicitly stated that UG violations are not violations of logic, or logical form. His famous example, meant to show the autonomy of syntax from semantics (i.e., the independence of form from meaning), was somehow meant to illustrate this: “Colorless green ideas sleep furiously” is grammatically correct but makes no sense.

But of course it does make sense, GPT, doesn’t it? Unlike “John is easy to please Mary,” which doesn’t make sense unless it is tweaked.

ChatGPT: These points on Noam Chomsky’s theory of Universal Grammar (UG) and the idea of “unthinkable thoughts” are interesting.

You’re right that Chomsky proposed that UG reflects constraints on possible human thought. In his view, UG is innate in all human brains and governs the structure of language, thereby determining what kinds of sentences are possible or “thinkable”.

This perspective suggests possible ways to understand how meaning might emerge from ungrounded text. Perhaps, as you suggested, there is some inherent “sense” or structure to sentences that obey UG, even if the sentences aren’t grounded in the way that human language is. After all, these sentences reflect possible structures of thought in the human mind.

As for Chomsky’s famous example of “Colorless green ideas sleep furiously”, I, as an AI, can analyze the sentence and tell you that it is grammatically correct. However, from a semantic perspective, this sentence doesn’t make conventional sense because it combines words in ways that violate our real-world understanding. That is, in the world as we know it, ideas can’t be colorless or green, nor can they sleep, and certainly not furiously. So while the sentence is structurally sound, it defies our understanding of how the world works. Yet, you’re correct that it still holds a certain “sense” or structure that is absent in sentences that violate UG.

The possibility that language may somehow carry a “shadow” of meaning even in the absence of grounding is worth exploring further.