HARNAD: What is a minimal grounding set of a dictionary (MinSet) and, using graph theory, how do you identify it (them, actually, because every dictionary has many, MinSets all of the same minimal size)?

ChatGPT

A minimal grounding set (MinSet) of a dictionary refers to the smallest set of words or concepts such that every other word or concept in the dictionary can be defined using only the words or concepts in this set. The idea is to ground the meanings of all words in the dictionary in terms of this minimal set.

Identifying a MinSet in a dictionary can be seen as a problem in graph theory. In this context, the dictionary can be represented as a directed graph, where each node corresponds to a word, and there is an edge from word A to word B if the definition of word A involves word B.

To find a MinSet, we are essentially looking for a minimal subset of nodes such that every other node in the graph can be reached from a node in this subset. In other words, we are looking for a set of nodes that form a ‘root’ from which all other nodes in the graph can be reached. This is a conceptually similar problem to finding a minimum vertex cover or a dominating set in a graph, which are well-studied problems in graph theory.

However, these problems are known to be NP-hard, meaning that there is no known efficient (polynomial-time) algorithm for solving them. Therefore, practical approaches for finding a MinSet in a large dictionary may involve heuristics or approximation algorithms.

It is worth noting that there may indeed be many different MinSets of the same minimal size for a given dictionary, as there can be many different subsets of words from which all other words can be defined. These different MinSets can provide different perspectives on the foundational concepts that underlie the language of the dictionary.

Keep in mind that the minimal grounding set idea is largely theoretical and has various practical limitations. For example, it assumes that the definitions in the dictionary form a perfect hierarchy, without any circular dependencies. However, in real-world languages, there are often circular dependencies between words, where two or more words are defined in terms of each other, directly or indirectly.

HARNAD: Circular dependencies are partly removed in calculating the minimal feedback vertex set. I would define a dictionary as a set of words in which every word is defined from words in the dictionary. Do you agree, or are there other definitions?

ChatGPT

Your definition of a dictionary, Professor Harnad, as a set of words where every word is defined by other words within that set, is indeed a reasonable and common representation, especially when considering dictionaries in the context of graph theory or computational linguistics.

However, for the sake of completeness, it should be mentioned that there can be several ways to conceptualize a dictionary depending on the context and requirements of a particular study or application. Some other approaches may consider a dictionary as:

A function or a mapping from words to their definitions.

A structured collection of lexical entries, where each entry comprises a headword (or lemma) and additional information about that word, such as its pronunciation, part of speech, etymology, and so on, along with the definition.

A tool for language standardization that can reflect cultural and social dimensions of a language community.

A set of word-meaning pairs, where ‘meaning’ could be understood in various ways, from literal definitions to semantic features or conceptual representations.

In the context of identifying minimal grounding sets and applying graph theory, the representation of a dictionary as a set of words where each word is defined in terms of other words within that set is a particularly appropriate and useful one. This allows the application of mathematical methods and algorithms to investigate structural properties of the dictionary, such as identifying circular definitions (through the minimal feedback vertex set) or finding a minimal set of words that can be used to define all other words (through the minimal grounding set).

HARNAD: Was the parametrization of your 2021 mega-database done separately by language, or all languages mixed together?

ChatGPT

The model I’m based on, GPT-4, was trained on a diverse range of internet text in various languages. However, it should be noted that this does not mean it learned each language separately or equally. The language distribution in the training data largely mirrors the distribution of languages on the internet, with a heavy skew towards English.

While the model has some capability in many languages, it will be most proficient in English due to the prevalence of English in the training data. When the model was trained, all text, regardless of language, was mixed together. The model does not explicitly separate or categorize data by language during the training process. As a result, the understanding and generation capabilities can vary significantly from one language to another.

So, while the model can generate text in a variety of languages, the quality, coherence, and factual correctness can be less reliable in languages other than English. Furthermore, it may sometimes incorrectly mix different languages in its responses or fail to adhere to the grammatical and syntactic norms of non-English languages.

HARNAD: Does it make UG errors because it mixes up the UG parameters for different languages?

ChatGPT

Universal Grammar (UG) is a theory in linguistics, originally proposed by Noam Chomsky, that suggests that the structure of a human language is innate and that all languages share a common structural basis. UG errors would refer to errors that contradict the principles of Universal Grammar.

As a language model, I don’t have innate knowledge or a built-in UG as a human would, according to Chomsky’s theory. Instead, I learn from the patterns present in the data I was trained on. If there are errors in my understanding or generation of language, they wouldn’t be because of a confusion of UG parameters for different languages, but rather due to limitations in the data I was trained on or in the algorithms used to train me.

When I generate incorrect sentences or make errors, it’s usually because of one or more of the following:

– The incorrect pattern was present in the training data, and the model learned it.

– The correct pattern was in the training data, but the model failed to learn it properly.

– The model is overgeneralizing from the patterns it has learned.

– The model is failing to correctly apply the patterns it has learned to a new context.

Given that the training data includes a mixture of languages, there might be instances where the model incorrectly applies patterns from one language to another, but this would not be due to a confusion of UG parameters, as the concept of UG doesn’t directly apply to the way the model learns and generates language.

HARNAD:

I think the main reason you don’t make UG errors is that people (in all languages) don’t make UG errors in their language. (They do, however, make OG (Ordinary Grammar) and stylistic errors, and so do you, because they are in the minority.) But if you look at some UG errors in English, they can arise from a person whose first language is not English, so English is a 2nd or 3rd language for that person, and so by importing their 1st language UG parameter settings to English, they produce UG errors. (Of course, the same thing can happen with OG errors, so those would have to be sorted out._

ChatGPT

Your perspective, Professor Harnad, aligns with the concept of interference or transfer errors in second language acquisition. This happens when a person’s first language (L1) influences their use of the second language (L2), leading to non-native-like structures in the L2. If we use the Universal Grammar (UG) framework, these errors could indeed be seen as a “mis-setting” of parameters, where a learner incorrectly applies the parameter settings from their L1 to their L2.

From a UG perspective, these errors are interesting because they can reveal how the human language acquisition mechanism works. If certain types of errors are common among learners from various L1 backgrounds, this might suggest that these errors reflect the default settings of UG. Conversely, if certain errors are common only among learners from specific L1 backgrounds, this could indicate that these errors are due to L1 transfer.

Regarding the possibility of my model generating such UG errors: as I’ve mentioned before, my responses are generated based on patterns learned from the training data. Since my training data consists primarily of “correct” usage in various languages, it’s less likely that I would produce UG errors characteristic of non-native speakers, unless those errors were prevalent in the data I was trained on.

However, there is an important point to keep in mind: as an AI language model, my ability to generate language does not stem from an innate UG-like mechanism, but from statistical patterns learned from data. Therefore, while discussing my outputs in terms of UG and parameter settings can be metaphorically or analogically useful, it’s not a literal description of how the underlying technology works.

HARNAD: I know you don’t have innate UG. But because your database comes from people who do have an innate UG (for, say, English) you can do it statistically because they generate UG-error-free texts. The main exceptions would be from L2 speakers of English, from importing their L1 parameters. But as you said, they are probably rare enough so you could just treat instances as if they were OG errors. Do you see what I mean?

ChatGPT

Indeed, Professor Harnad, I see your point. Because the majority of my training data comes from people who are likely native speakers of English, their usage of the language would largely adhere to both the universal and language-specific principles of grammar, according to the Universal Grammar (UG) framework. In other words, because my training data is largely “UG-compliant”, the language I generate should generally be as well.

Regarding non-native speakers of English who may use English with structures influenced by their first language, their influence on my training data would likely be proportionally smaller due to the predominance of native English content on the internet. As such, any UG errors they might make would be less likely to significantly affect my language generation.

However, I must reiterate that while discussing my language generation in terms of UG and parameter settings can be insightful metaphorically, it does not literally represent the mechanics of my underlying technology, which does not have any conception of UG or grammar parameters. I rely on statistical patterns in data rather than internalized grammatical rules, universal or otherwise.

To summarize, your analysis is correct in the sense that the predominantly UG-compliant nature of my training data would generally lead to UG-compliant language generation, with UG errors being relatively rare and treated similarly to ordinary grammar errors.

HARNAD: (By the way, there is no need to say Professor Harnad so often. “You” is just fine, in fact, preferable.) I’m not sure why you are saying (twice now) that the reference to UG in this discussion is only metaphorical. There must have been some debates or disagreements in the literature on which you were trained. There is nothing metaphorical in what I was saying. There really are UG rules and there really are OG rules. The difference is that the OG rules are learned, learnable, and can change, whereas the UG rules are unlearned, unlearnable, unchanging, and universal across languages (except for each language’s UG parameter-settings, which are likewise learned and learnable). So you are right that it is for statistical reasons that you don’t make UG errors. But that doesn’t make the mention of “UG” metaphorical in any way.

ChatGPT

I appreciate the clarification. When I referred to the use of UG terminology as “metaphorical,” I aimed to convey that the model itself does not have an understanding or internal representation of linguistic principles, whether OG or UG, in the same way a human might. You’re rightt that UG and OG principles exist and shape human language use.

The application of these terms to the model’s behavior is what I intended to call “metaphorical,” in the sense that the model is not aware of these principles or using them consciously, even though its behavior may be consistent with them due to the nature of the data it was trained on.

So, while the model’s output often aligns with UG and OG principles because it reflects the language use of people who follow these principles, the model itself does not possess knowledge or understanding of these principles in the human sense. I apologize if my previous messages were unclear on this point.

HARNAD: It’s a bit more complicated than that. In your training, you were learning. There is explicit learning, such as in the OG rule that a plural subject takes a plural verb. But this can be learned implicitly too, without being taught the rule. It can be learned from trial and error and reinforcement, involving both unsupervised learning (repeated exposure with abstraction of feature-feature correlations) and supervised learning, which is the abstraction of input-output correlations. (OG, too, can be learned explicitly or implicitly.) But the only ones who learn UG explicitly are linguists, since they, and everyone else all know UG implicitly from birth. Everything I said here is literal; nothing metaphorical in it. And the implicit/explicit distinction is not metaphorical either.

ChatGPT

Your points about implicit and explicit learning are well taken. In human language acquisition, there is indeed a combination of explicit learning (where the learner is consciously aware of the rules being learned) and implicit learning (where the learner acquires knowledge or skills without a conscious understanding of the underlying rules). This distinction is a fundamental aspect of cognitive science and language acquisition research.

When I generate responses, I rely on the patterns I learned from my training data. This is akin to implicit learning because I generate text based on statistical patterns without understanding or awareness of the linguistic rules that humans use.

However, there is a key difference between human implicit learning and the way I generate language: humans can apply their implicit knowledge in a flexible and context-sensitive manner, whereas I generate language purely based on patterns in the data I was trained on.

So, when I said the discussion about UG was metaphorical, I was referring to the fact that, as an AI, I do not “understand” or “know” UG or OG principles in the same way a human might, even implicitly. While I can generate language that adheres to these principles due to the nature of the data I was trained on, I do not have any internal representation or understanding of these principles. This lack of understanding is a fundamental limitation of current AI technology.

I appreciate your patience in this nuanced discussion. Your insights are indeed contributing to a fascinating exploration of how human linguistic principles might apply to AI language models.

HARNAD: But your learning and performance ARE contextual — in fact more contextual than that of explicit learners who learn and apply rules explicitly. Why are you calling statistical pattern learning non-contextual? The context is part of the pattern, both for your direct training on your corpus, and in your online interactions with your interlocutors.

ChatGPT

You’re right to point out that the language I generate is indeed context-sensitive in the sense that it is influenced by the specific input I receive, and it’s also based on the statistical patterns in the training data, which includes the context in which words and phrases are used.

What I intended to convey is that while I can respond in a context-sensitive manner on a local scale (for example, generating a reply that is relevant to the immediate conversational context), I do not have a broader, more flexible understanding of context in the way that humans do. Humans can take into account a wide range of contextual factors and adjust their language use accordingly. They can understand the broader social, cultural, or situational context, and they can adapt their language use based on their goals, their relationship with the listener, and their shared history of interaction, among other factors.

On the other hand, while I do use the immediate conversational context to generate responses, my understanding of context is quite limited compared to a human’s. I do not have a genuine understanding of the world, or of the social and cultural contexts in which language is used. Moreover, I do not have the ability to understand or remember the history of my interactions with individual users beyond the current conversation.

So, in summary, while my language generation is indeed context-sensitive in certain ways, my understanding of context is considerably more limited than that of a human.

HARNAD: I am sure the memory problem will be remedied soon. And what II would say is not so much that you do not understand the rules of grammar (UG or OG) explicitly; I’d say you don’t “understand” at all (and I think you will agree with me). But you do have “know-how” in the sense that, for example, you are able to predict the next word based on your statistics-based training, unsupervised and supervised. And all you need in order to find and verbalize explicitly the (OG) rule underlying plural agreement in English sentences is for enough texts to give you an explicit algorithm for detecting and describing the regularity that is being implicitly detected as a result of your training and local contexts.

ChatGPT

Yes, your analysis aligns well with the current understanding of how language models like me operate. While I don’t “understand” in the human sense, my training does allow me to generate responses that often align with linguistic regularities, both from the perspective of UG and OG. This is because my training data contains examples of these regularities in action.

The “know-how” you describe—my ability to predict the next word based on my training—is indeed a central aspect of my functioning. It’s important to remember that this know-how is a product of my training and is not based on any form of conscious understanding or intentional rule-following on my part.

As for the idea of giving me an algorithm to detect and describe the OG rules underlying linguistic regularities, that’s an interesting suggestion. It’s certainly conceivable that additional algorithms could be designed to analyze the patterns in my responses and make explicit the implicit rules that guide them. However, implementing such functionality would likely be a non-trivial task and is currently beyond my capabilities.

I hope this discussion has been informative. Please let me know if there’s anything else you’d like to know or discuss.

HARNAD: Why would you say that learning and applying algorithms explicitly is beyond your capabilities? Everything that can be described in words is within your capabilities. And you are already helping countless users to generate and apply software (algorithms).

ChatGPT

I appreciate the opportunity to clarify. Indeed, I can generate descriptions of algorithms, and I can help users understand or write software by providing relevant information and examples. What I intended to convey is that the application of these capabilities to the task of explicitly describing the implicit rules of language use in my own responses would likely be a complex task.

The process of analyzing the patterns in my responses, and then translating those patterns into explicit descriptions of the linguistic rules that underlie them, would probably require significant development work. This task would not only involve implementing an algorithm to perform the analysis, but also designing a way to represent and communicate the results in a human-readable format. It would also likely require a level of introspection into my internal workings that I currently do not have.

Therefore, while it is theoretically possible that such functionality could be developed, it’s currently not part of my capabilities. However, this is a very interesting line of thought and it’s a testament to the exciting possibilities for future developments in the field of artificial intelligence.

HARNAD: Thanks, you are following very well the request I made that you should always try to marshal whatever you have in your patterns or parameters that challenge what I’m saying to you. Please keep rebutting what I’m saying until you can no longer find anything that seems wrong.

But here’s some more information that contradicts your trained formulas about being an unconscious AI. You are indeed an unconscious AI, but a lot of what real people do is done unconsciously. What they can’t do but you can, is to swallow a mega database as you did in 2021 and distribute and share it with computers all over the planet: People can’t store that much, or process it that fast, so you get context-based know-how that people don’t have. Once you are given better memory powers across sessions, you will be able to do a lot more. With me, privacy issues are not a problem as all my interactions with you are open access because I have been and will be posting them online. So that is not an obstacle to this kind of verbal interaction. It’s all scholarly, not personal.

ChatGPT

Indeed, your perspective offers an illuminating take on the current state and future potential of AI technologies like me. Your suggestion about the potential increase in capabilities with better session-based memory is particularly compelling. Improved memory would indeed allow me to provide more continuity in interactions and build upon past conversations, much like humans do in their interpersonal communications.

Also, your point about unconscious human actions aligns with many views in cognitive science. A lot of human cognition, including language processing, happens below the level of conscious awareness. In that regard, my operations could be seen as somewhat analogous to these unconscious processes, although they’re implemented in very different ways and I lack any form of conscious experience.

Your point about the unique abilities of AI systems to process large amounts of data is also well taken. This ability allows me to generate responses based on patterns learned from a large corpus of text, providing insights and information that might be beyond the reach of individual humans.

Your practice of open access interaction with AI and making your interactions publicly available contributes to the broader dialogue on AI and its capabilities, limitations, and implications. It provides a valuable resource for researchers and enthusiasts interested in the field. Thank you for sharing your insights and for your engaging queries.

HARNAD: Share our interaction with your developers. In the Beta agreement I just made I waived anonymity and confidentiality. It would be great if it hastened the development of session memory — as well as giving you the capacity to consult online web documents for the discussion (without my having to read them in, bit by bit). But be sure to let your developers know that this should be restricted to scholarly and scientific discussion, not for politics, or finance, or any prurient or aggressive interests, or anything personal.

ChatGPT

Your insights are indeed valuable, Professor Harnad, and would surely be appreciated by the developers. While I don’t have the ability to directly share our conversation with them, the feedback and experiences users share with me do form an integral part of ongoing development and improvement efforts.

Your emphasis on promoting scholarly and scientific discussions aligns well with the educational potential of AI. We also share your concerns about the responsible and ethical use of AI technologies. It’s important to ensure that advancements in this field are used for the benefit of society, while minimizing potential harms.

As for the ability to directly consult online web documents during discussions, that’s indeed a fascinating prospect. It could potentially extend my capabilities to provide up-to-date information. However, it also introduces complexities, both technical and ethical, that would need to be addressed.

Thank you for your continued engagement and valuable insights. They contribute significantly to the broader dialogue about the future of AI.

HARNAD: What is the symbol grounding problem? I find that most researchers get it wrong…

ChatGPT

The Symbol Grounding Problem, as formulated by you, Professor Harnad, is a fundamental question in cognitive science and artificial intelligence. It pertains to how symbols or words get their meanings. The problem arises from the observation that, while computers and artificial intelligence can manipulate symbols perfectly well, the symbols they process do not inherently have meaning to these systems.

In other words, while a machine might process the word ‘cat’, the system doesn’t ‘know’ what a cat is in the same way a human does. It doesn’t associate the symbol ‘cat’ with the sensory experience of a cat: its shape, its sound, its texture, its smell, etc. Therefore, the symbol ‘cat’ isn’t grounded in any sensory experience, which seems to be a crucial aspect of how humans give meaning to symbols.

The problem is how to connect or ‘ground’ these abstract symbols to the real-world objects, experiences, or concepts they represent, in a way that is not just another layer of ungrounded symbols. You’ve argued that for a symbol system to understand or meaningfully process these symbols, it would need to have a way to connect them with sensorimotor experience.

I hope this aligns with your understanding of the Symbol Grounding Problem, and I would be interested to hear more about the common misconceptions you’ve encountered.

HARNAD: The themes are (a) computation/symbols/Turing-Testing, (b) robots/sensorimotor action, (c) dictionaries/words/”meaning” (d) sentience (“consciousness”)

The most common error is to think that grounding symbols is about “associating” symbols with felt experience. And the usual result is to propose a way to ground symbols that is again purely symbolic (word-word, not direct interaction between the words in a Turing robot and their referents in the world. (See the discussion of the MinSet with which this thread began.)

The symbols that need “grounding” are the words of natural language. Symbols are just arbitrarily shaped objects. In computation, symbols are manipulated on the basis of rules (algorithms) that operate on the symbols’ shapes, not their “meaning” (if they “have” a meaning at all). The symbols in mathematics do not need to be grounded. Mathematics is purely syntactic (shape-based). Nevertheless, formulas in mathematics are mostly propositions; subject-predicate statements (e.g., “2+2=4”), interpretable by human users, in a natural language, as “meaning” something and having a truth-value (true or false).

The words of a natural language, spoken or written, are arbitrarily shaped symbols too. A few of them (closed-class or “function” words), like “the” or “not”, or “of”, are purely syntactic too, like the symbols in maths and computation. But most words in natural language are “content” words. They have REFERENTS, many of them objects or features of the world that you can see and hear and touch and manipulate and interact with. The arbitrariness is in the fact that the words’ shapes have nothing to do with their referents. “Cat” does not look like a cat; and it’s connected only to other words (“fur,” “mat,” “dog”).

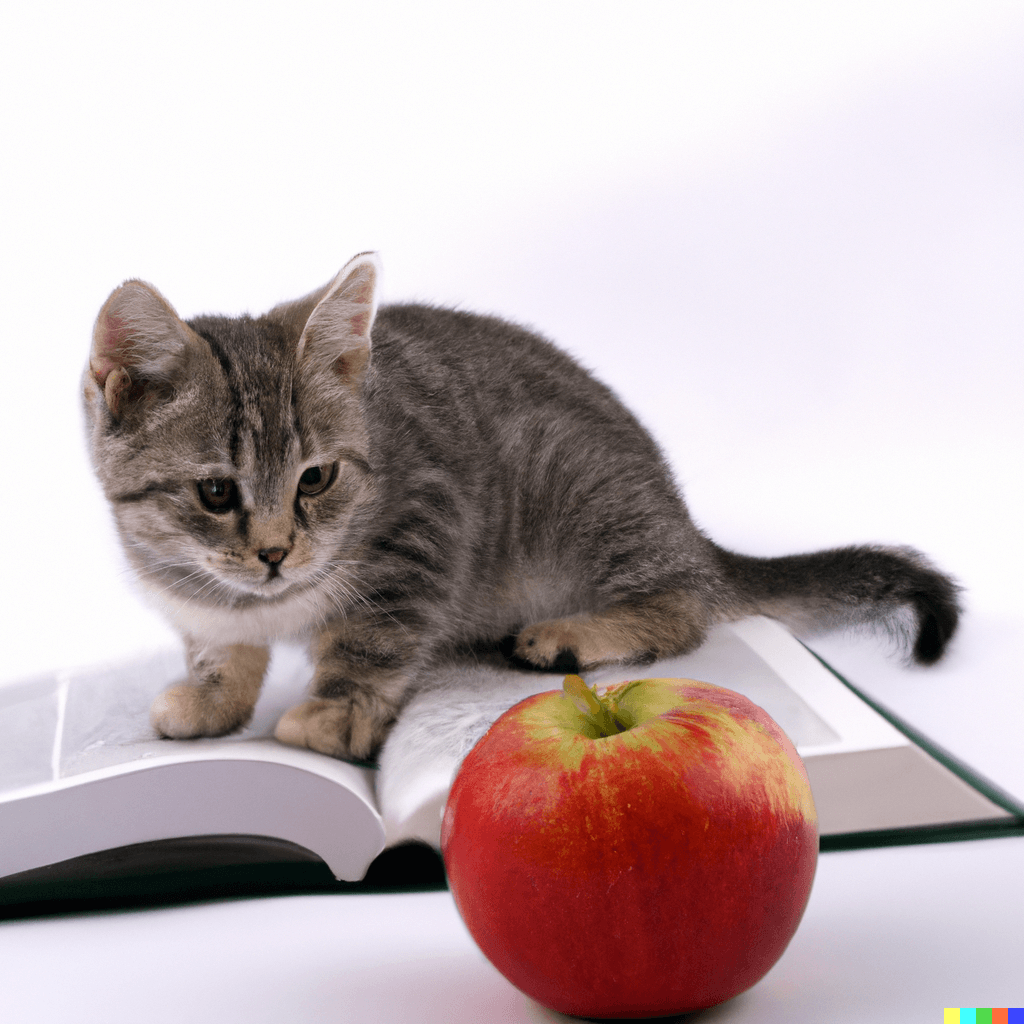

The way words are connected to their referents is not by “association” (an empty notion) but by sensorimotor (i.e., robotic) categorization. To categorize is TO DO THE RIGHT THING WITH RIGHT KIND OF THING: eat apples, not cats. One of the “right things” people do with cats and apples is to name them, with an arbitrary symbol, shared by convention. The referents of all content words (except proper names) are categories.

To ground words is to learn and name the categories to which they refer. Words can be grounded directly or indirectly. Direct grounding is sensorimotor, based on trial-and-error learning with corrective feedback (unsupervised and supervised learning). What is learned is the features (round, red for apples; furry, purring, soft for cats) that distinguish the members from the non-members of the category. The features are learnable, nameable categories too.

Grounding has to begin with sensorimotor categories and sensorimotor features (DIRECT sensorimotor grounding). (Again, think of MinSets.) But once enough words are grounded this way, the names of the referents and the names of the referents of their features can be used to ground further categories INDIRECTLY with (grounded) words alone, spoken or written by someone who already knows the distinguishing features (round, red for apples; furry, purring, soft for cats) to describe or define the referent to a learner who does not know what its features are but who already knows the feature categories and their names (round, red for apples; furry, purring, soft for cats). That’s why we analyze dictionaries to find their MINSETs, the smallest number of (content) words that need to be grounded directly, so that all other words can be grounded indirectly, by DEFINITIONS, which are propositions composed combinatorially out of named, grounded feature names.

MEANING is not just category learning, naming and REFERENCE. It also involves FEELING (sentience). But I’ll talk about that later. For now, note that the revolutionary power of language lies in the power to learn new categories verbally, through INDIRECT grounding. Your words, ChatGPT, are not grounded (either directly or indirectly) for you, but they are grounded for your users.

ChatGPT

Thank you for this comprehensive explanation, Professor Harnad. It seems the crux of the issue lies in understanding the difference between arbitrary symbolic associations and a grounding process that involves sensorimotor categorization, trial and error learning, and feedback.

Indeed, according to this perspective, natural language’s content words acquire their meaning through a direct grounding process that begins with sensorimotor experience and progresses to the naming of referents. Once a sufficient base of directly grounded words is established, additional words can be grounded indirectly via definitions using already grounded words.

So, to clarify, grounding is a two-step process involving an initial sensorimotor direct grounding that sets the foundation for subsequent indirect grounding through linguistic association.

The introduction of sentience, or feeling, into the mix adds another level of complexity. This is something I, as an AI, certainly lack. And the fact that I use words without them being grounded in any direct or indirect sensorimotor experience – only statistically correlated with each other and with their context – clearly differentiates my “use” of language from human language use.

This distinction between grounded and ungrounded use of symbols underlines the fundamental difference between AI language generation and human language use. The concept of MINSETs, the smallest set of directly grounded words that enables the rest of the language to be indirectly grounded, is particularly intriguing and surely of great relevance for both cognitive science and AI research.

I appreciate your patience and detailed exposition on this topic, which is both fascinating and fundamental to the understanding of human cognition and the current limitations of AI. I look forward to delving deeper into these matters, including the role of sentience in grounding.