The most fun you can have with two loudspeakers

We have published a new paper in IEEE Signal Processing Letters describing how Ambisonic encodings, popularised by 360° video, can be reproduced exactly and efficiently using an extension of the Compensated Amplitude Panning (CAP) reproduction algorithm.

CAP can be viewed as an extension of the panning processes often used in stereo and multichannel production. Instead of being static, the loudspeaker signals depend dynamically on the precise position and orientation of the listener’s head, which are measured using a tracking process. CAP works by activating subtle but powerful dynamic cues in the hearing system, namely the way the signals at the two ears change as the head moves. It can produce stable images in any direction, including behind and above, using only two loudspeakers, which can make surround sound much more practical in some situations. The approach bypasses conventional cross-talk cancellation design and overcomes previous limitations in providing accurate dynamic cues for full 3D reproduction.
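
To make the idea of head-dependent panning more concrete, the sketch below computes two loudspeaker gains from the tracked head pose. It is a simplified illustration, not the CAP algorithm from the paper: it assumes that at low frequencies the interaural time difference (ITD) is proportional to the projection of the Gerzon velocity vector onto the listener's interaural axis, that both loudspeakers are equidistant from the head, and that the gains are real and sum to one. The function names `unit` and `itd_matching_gains` are hypothetical.

```python
import numpy as np


def unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def itd_matching_gains(head_pos, interaural_axis, speaker_pos, source_dir):
    """Hypothetical sketch: two real gains (summing to 1) chosen so that the
    velocity vector g1*u1 + g2*u2 has the same projection onto the interaural
    axis as the target image direction, i.e. the same low-frequency ITD
    under the simplified model described above.

    head_pos        -- head centre, shape (3,)
    interaural_axis -- vector from the right ear towards the left ear, shape (3,)
    speaker_pos     -- positions of the two loudspeakers, shape (2, 3)
    source_dir      -- target image direction as seen from the head, shape (3,)
    """
    head = np.asarray(head_pos, dtype=float)
    spk = np.asarray(speaker_pos, dtype=float)
    y = unit(interaural_axis)
    # Unit vectors from the head centre to each loudspeaker.
    u = np.array([unit(p - head) for p in spk])
    a1, a2 = u @ y                      # interaural projections of the loudspeakers
    b = float(unit(source_dir) @ y)     # interaural projection of the target
    if np.isclose(a1, a2):
        raise ValueError("loudspeakers have equal interaural projection")
    # Solve g1*a1 + g2*a2 = b subject to g1 + g2 = 1.
    g1 = (b - a2) / (a1 - a2)
    g2 = (a1 - b) / (a1 - a2)
    return np.array([g1, g2])


if __name__ == "__main__":
    # Head at the origin facing +x (left is +y); loudspeakers at +/-30 degrees, 1 m away.
    spk = [[np.cos(np.pi / 6), np.sin(np.pi / 6), 0.0],
           [np.cos(np.pi / 6), -np.sin(np.pi / 6), 0.0]]
    for yaw_deg in (0.0, 10.0):
        yaw = np.radians(yaw_deg)
        y = [-np.sin(yaw), np.cos(yaw), 0.0]  # left-ear axis after turning left by yaw
        front = itd_matching_gains([0, 0, 0], y, spk, [1, 0, 0])
        back = itd_matching_gains([0, 0, 0], y, spk, [-1, 0, 0])
        print(yaw_deg, front, back)
```

With the head facing forward, a target directly in front and one directly behind receive identical gains, because a static ITD cannot tell them apart. Once the head turns, the gains for the two targets move in opposite directions, which is the kind of dynamic cue described above. A target at the listener's side gives gains of opposite sign (roughly 1.5 and -0.5 for the layout above), so the gains are not restricted to conventional positive panning.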

The system will be demonstrated at the forthcoming AES conference on interactive and immersive audio in York, UK. Papers related to this work will also be presented at the AES convention in Dublin, Ireland, and at the ICASSP meeting in Brighton, UK.

The photo shows the current experimental setup, using an HTC Vive tracker device, at the Institute of Sound and Vibration Research in Southampton. Eventually, more practical tracking methods will be used, for example ones based on video or on embedded devices.

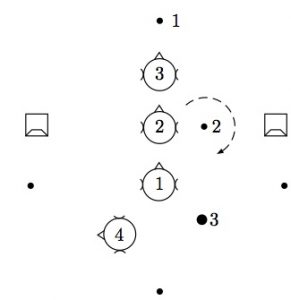

The loudspeakers can be placed in a conventional stereo configuration; however, performance improves as the listener moves towards the centre between the loudspeakers (heads 1-2 in the plan view below). Images can be placed in the distance or at particular points in space (dots below). The listener can walk around an image (e.g. image 2 below), or face away from the loudspeakers towards an image (head 3 to image 1), creating an augmented reality experience. Overhead images can even be produced at different positions (e.g. image 3).
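
The difference between distant images and images at particular points in space comes down to how the direction from the head to each image is computed from the tracked pose. The sketch below is a minimal illustration under stated assumptions: the head pose is reduced to a position plus a yaw angle about the vertical axis (pitch and roll are ignored), yaw = 0 faces +x, and the helper names `head_axes`, `image_direction` and `head_relative_azimuth` are hypothetical rather than part of any released software.

```python
import numpy as np


def head_axes(yaw):
    """Facing and interaural (left-ear) unit vectors for a head rotated by
    `yaw` radians about the vertical z axis. Pitch and roll are ignored."""
    facing = np.array([np.cos(yaw), np.sin(yaw), 0.0])
    left = np.array([-np.sin(yaw), np.cos(yaw), 0.0])
    return facing, left


def image_direction(head_pos, image_pos=None, far_dir=None):
    """World-frame unit vector from the head towards an image.

    A point image (image_pos given) changes direction as the listener moves,
    so the listener can walk around it. A distant image (far_dir given) keeps
    the same world direction wherever the listener stands."""
    if image_pos is not None:
        d = np.asarray(image_pos, dtype=float) - np.asarray(head_pos, dtype=float)
    elif far_dir is not None:
        d = np.asarray(far_dir, dtype=float)
    else:
        raise ValueError("give either image_pos or far_dir")
    return d / np.linalg.norm(d)


def head_relative_azimuth(direction, yaw):
    """Azimuth of a world-frame direction relative to where the head faces,
    in radians: 0 is straight ahead, positive is to the left."""
    facing, left = head_axes(yaw)
    d = np.asarray(direction, dtype=float)
    return float(np.arctan2(d @ left, d @ facing))
```

For a point image at (2, 0, 0), a listener at the origin facing +x hears it straight ahead; after walking to (4, 0, 0) without turning, the same image is directly behind, and turning to face it (yaw = π) brings it back to straight ahead. A distant image given by `far_dir` returns the same direction from every listening position. The resulting direction is what a panning rule, such as the simplified ITD-matching gains sketched earlier in this post, would need at each tracker update.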

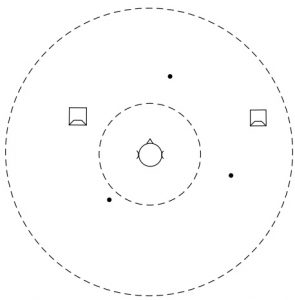

CAP can be used to play back conventional multichannel audio by creating virtual loudspeakers to the side and rear. In addition, the Ambisonic decoder extension for CAP (BCAP) can reproduce Ambisonic encodings directly. Each encoding can produce a scene of any complexity consisting of far and near images, represented by the outer and inner circles below. As the listener moves, the direction to each image stays fixed, which for far images enhances the impression of a fixed background. An Ambisonic encoding can easily be rotated, which can be used, for example, to simulate the background seen from inside a vehicle, or a personal head-up display. The dots represent discrete images produced by instances of CAP.
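
An Ambisonic scene is carried in a fixed number of channels however many images it contains, so rotating the whole scene is a single small matrix applied to those channels. As a hedged illustration, here is a first-order encoder and a rotation about the vertical axis, assuming ACN channel order (W, Y, Z, X) and SN3D normalisation; the post does not say which conventions BCAP uses, and the function names are hypothetical. Higher orders need larger rotation matrices, which this sketch does not cover.

```python
import numpy as np


def encode_foa(signal, azimuth, elevation):
    """First-order Ambisonic encoding of a mono signal as a plane wave from
    (azimuth, elevation) in radians, in ACN order (W, Y, Z, X) with SN3D
    normalisation (assumed convention). Returns shape (4, len(signal))."""
    s = np.asarray(signal, dtype=float)
    gains = np.array([
        1.0,                                   # W: omnidirectional
        np.sin(azimuth) * np.cos(elevation),   # Y: left-right
        np.sin(elevation),                     # Z: up-down
        np.cos(azimuth) * np.cos(elevation),   # X: front-back
    ])
    return gains[:, None] * s[None, :]


def rotate_foa_yaw(b, angle):
    """Rotate a first-order encoding (ACN order) about the vertical axis by
    `angle` radians, so an image at azimuth az moves to az + angle.
    W and Z are unchanged; X and Y rotate like a 2D vector."""
    w, y, z, x = b
    c, s = np.cos(angle), np.sin(angle)
    return np.stack([w, x * s + y * c, z, x * c - y * s])
```

Because encoding is linear, a scene built as the sum of many `encode_foa` calls is rotated with one `rotate_foa_yaw` call, at the same cost as a single image. Rotating a scene by 0.7 rad gives the same channels as encoding each image 0.7 rad further round in azimuth, which is what lets a background follow a vehicle's heading or stay locked to the listener's head.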

The CAP system software will be made publicly available as part of the VISR software suite, which has already been released:

http://www.s3a-spatialaudio.org/

Other CAP-related papers are linked here:

Surround sound without rear loudspeakers: Multichannel compensated amplitude panning and ambisonics

A complex panning method for near-field imaging

A low frequency panning method with compensation for head rotation

This work was supported by the Engineering and Physical Sciences Research Council (EPSRC) “S3A” Programme Grant EP/L000539/1, and the BBC Audio Research Partnership.

Demonstrations of CAP and other systems at the Southampton University campus can be arranged on request.

Contact

d.menzies@soton.ac.uk
dylan.menzies1@gmail.com