Today we can announce that the first version (0.1) of the Capturing the Contemporary Conducting data is now live on Repovizz. We aim to make further data available during 2020, including c3d files from this blog.

The three data packs can be found in the CtCC folder on the Repovizz site. They are CtCC_S1P1_01, CtCC_S2P1_01 and CtCC_S3P1_01.

Further technical details will soon be available, but each data pack contains the following:

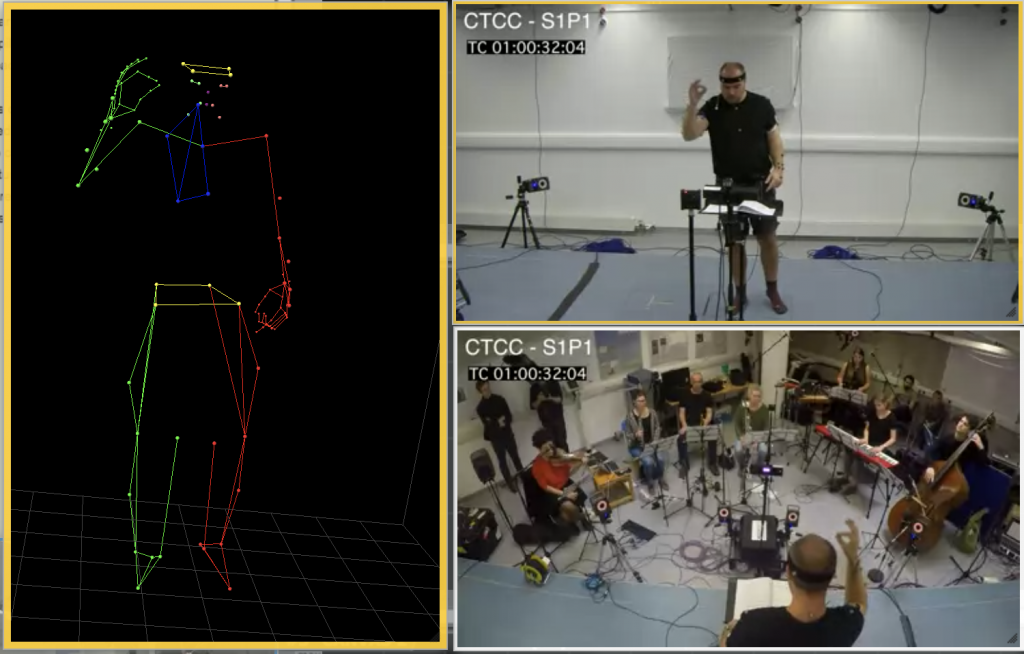

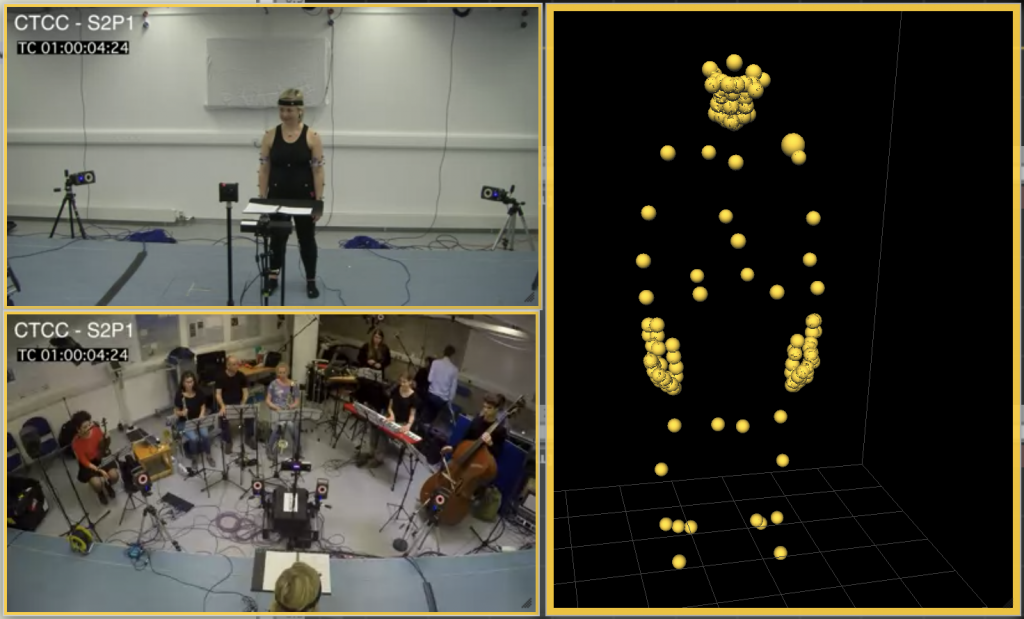

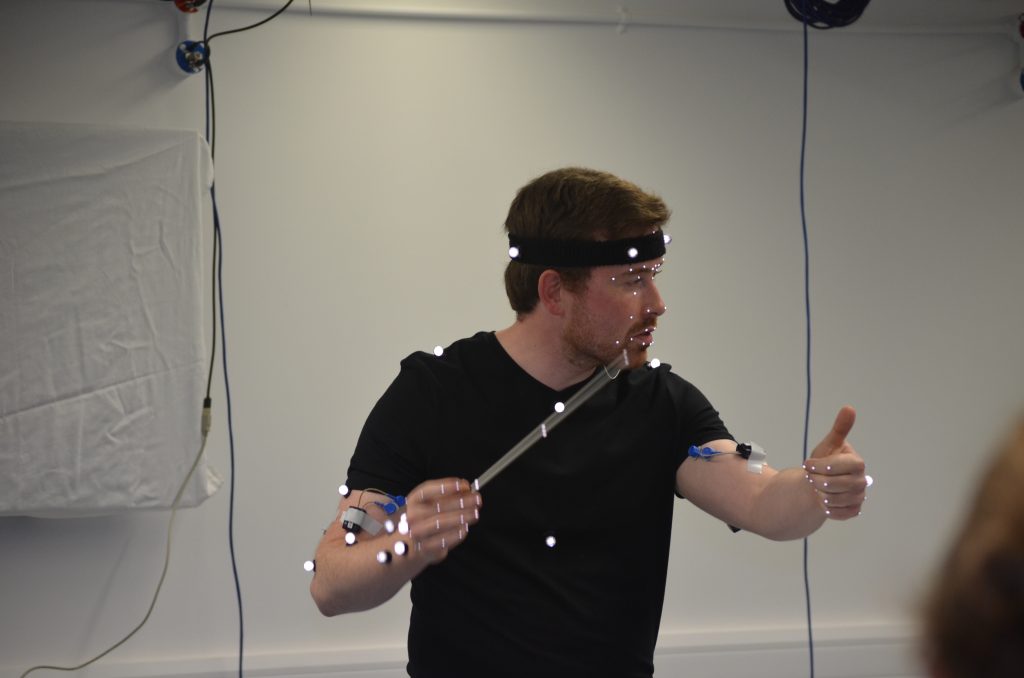

Mocap marker data: Plug-in Gait marker set (plus additional leg markers), HAWK hand markers for both hands, and a subset of the Southampton Face Marker Placement Protocol (some cleanup is still required for the remaining markers). Sampled at 100 Hz. Bones are included to help visualise the data.

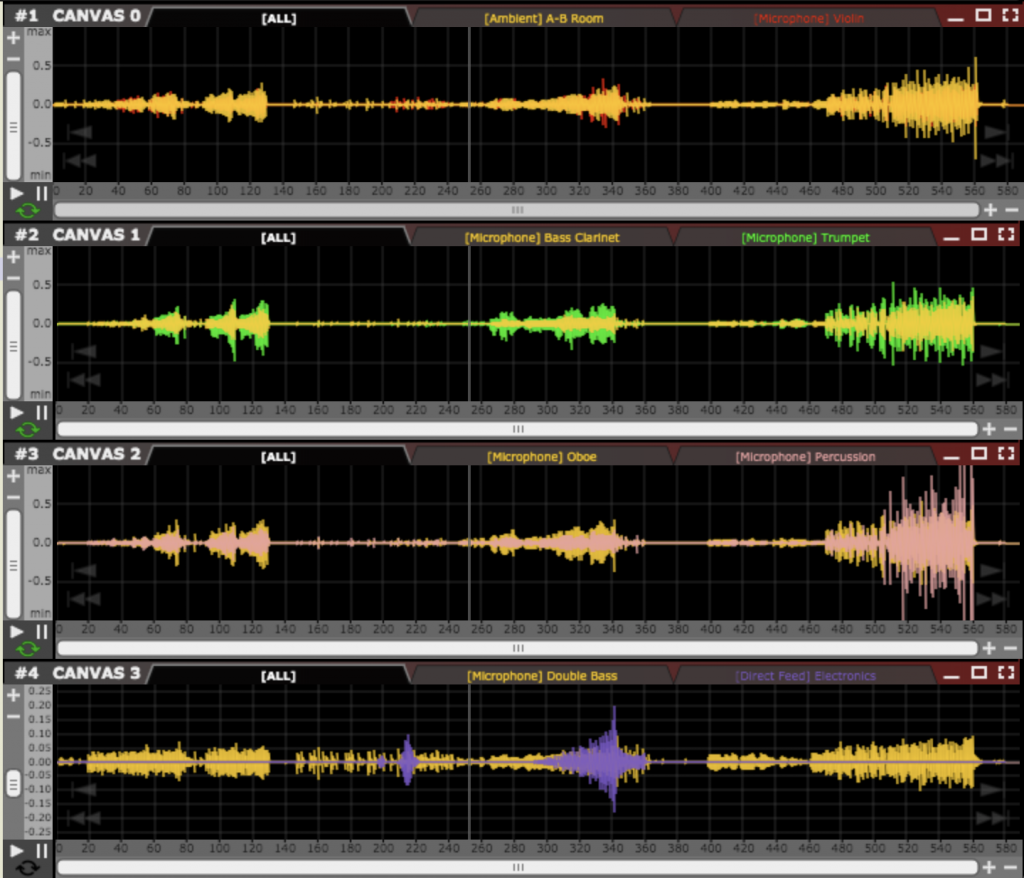

Audio data: a stereo mix; a room stereo pair; individual mics for oboe, violin, trumpet, percussion, double bass and bass clarinet; and direct feeds of the electronic part, Rhodes piano and click track. All stereo (apart from the click track), 24-bit, 48 kHz.

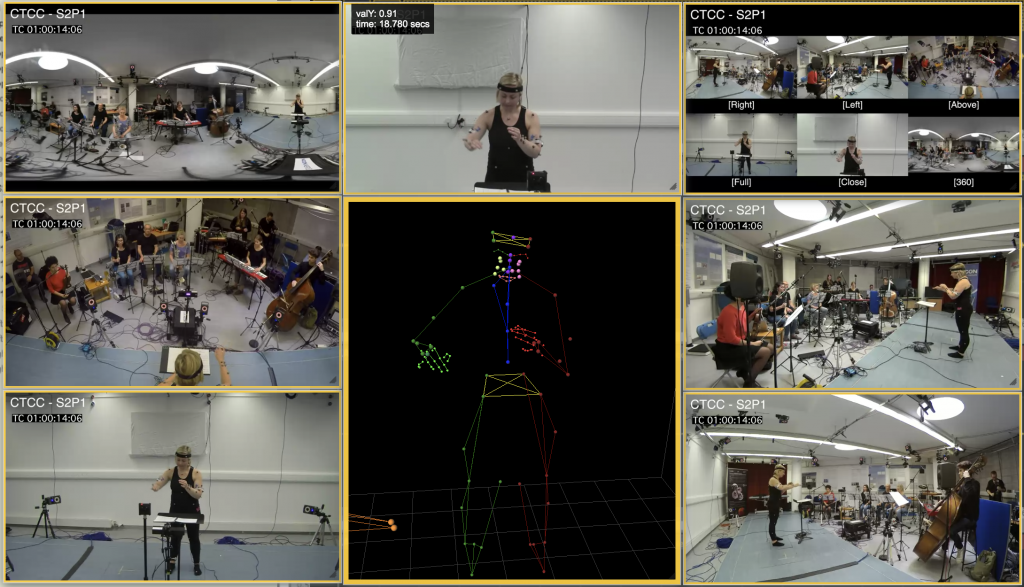

Video data: conductor close, conductor full, ensemble from above conductor, 360 degree (unwrapped), whole ensemble from left, whole ensemble from right, and a single video combining the others in a single frame. Each has visual timecode burned in.

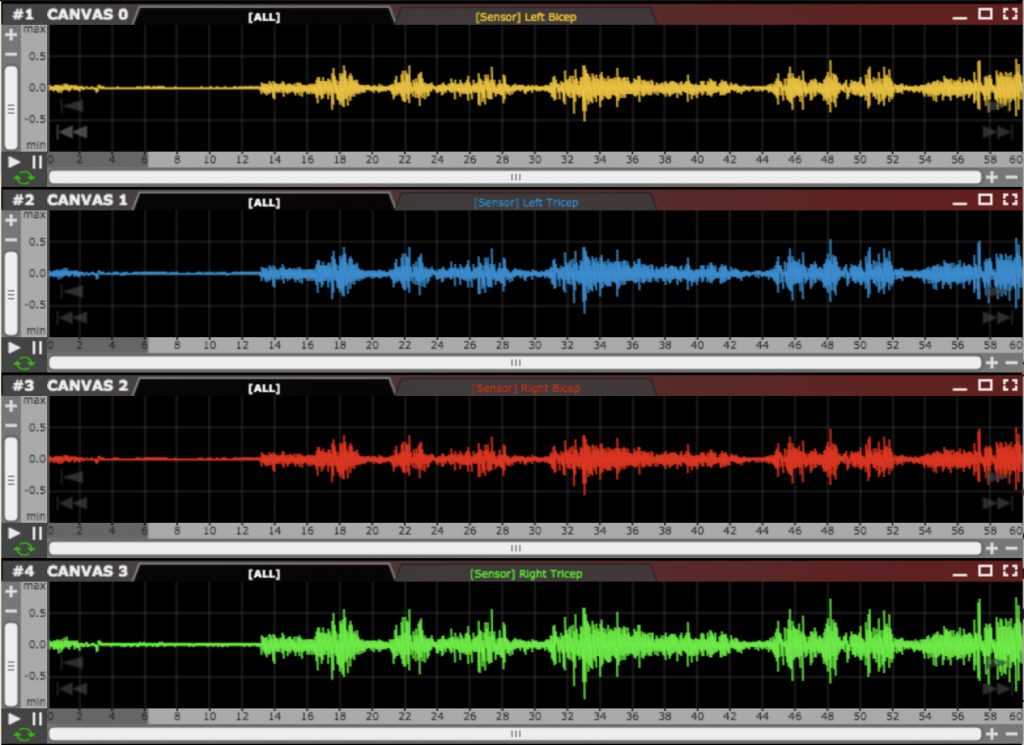

EMG data: biceps and triceps data from each arm, recorded with wireless EMG sensors at 1 kHz.

Metadata: descriptions of the above and a pdf of the score.
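Because the streams are sampled at different rates, it can help to convert a time in seconds to a sample index in each stream. The following is a minimal sketch only: the stream labels and function names are hypothetical, and it assumes that all streams in a data pack start at the same instant, which should be checked against the technical details once they are available. Video is left out because no frame rate is given above.

```python
# Sample rates as quoted above. The dictionary keys are illustrative labels,
# not names used inside the data packs.
SAMPLE_RATES_HZ = {"mocap": 100, "emg": 1000, "audio": 48000}


def sample_index(stream: str, t_seconds: float) -> int:
    """Return the index of the sample nearest to t_seconds in a stream.

    Assumption (not confirmed by the source): every stream starts at t = 0.
    """
    if t_seconds < 0:
        raise ValueError("t_seconds must be non-negative")
    return round(t_seconds * SAMPLE_RATES_HZ[stream])


def samples_per_mocap_frame(stream: str) -> int:
    """Return how many samples of a stream span one 100 Hz mocap frame."""
    return SAMPLE_RATES_HZ[stream] // SAMPLE_RATES_HZ["mocap"]


if __name__ == "__main__":
    # Show where the moment 2.5 s into a take falls in each stream.
    for name in SAMPLE_RATES_HZ:
        print(name, sample_index(name, 2.5), samples_per_mocap_frame(name))
```

With these rates, one mocap frame spans 10 EMG samples and 480 audio samples, so the faster streams can be sliced in whole blocks per mocap frame.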

The past few months I have been undertaking a literature review of books focused on conducting and music direction of contemporary music. This has included: conducting-related pedagogical textbooks and manuals; books by and interviews with leading conductors; texts dealing with more general issues in performing contemporary music; and doctoral dissertations on issues relating to conducting.
